The race for useful offsets

/We've been working on a 3D acquisition lately. One of the factors influencing the layout pattern of sources and receivers in a seismic survey is the range of useful offsets over the depth interval of interest. If you've got more than target depth, you'll have more than one range of useful offsets. For shallow targets this range is limited to small offsets, due to direct waves and first breaks. For deeper targets, the range is limited at far offsets by energy losses due to geometric spreading, moveout stretch, and system noise.

In seismic surveying, one must choose a spacing interval between geophones along a receiver line. If phones are spaced close together, we can collect plenty of samples in a small area. If the spacing is far apart, the sample density goes down, but we can collect data over a bigger area. So there is a trade-off and we want to maximize both; high sample density covering the largest possible area.

What are useful offsets?

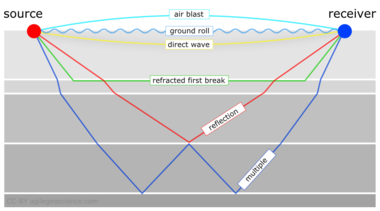

It isn't immediately intuitive why illuminating shallow targets can be troublesome, but with land seismic surveying in particular, first breaks and near surface refractions clobber shallow reflecting events. In the CMP domain, these are linear signals, used for determining statics, and are discarded by muting them out before migration. Reflections that arrive later than the direct wave and first refractions don't get muted out. But if these reflections arrive later than the air blast noise or ground roll noise — pervasive at near offsets — they get caught up in noise too. This region of the gather isn't muted like the top mute, otherwise you'd remove the data at near offsets. Instead, the gathers are attacked with algorithms to eliminate the noise. The extent of each hyperbola that passes through to migration is what we call the range of useful offsets.

The deepest reflections have plenty of useful offsets. However if we wanted to do adequate imaging somewhere between the first two reflections, for instance, then we need to make sure that we record redundant ray paths over this smaller range as well. We sometimes call this aperture; the shallow reflection is restricted in the number of offsets that it can be illuminated with, the deeper reflections can tolerate an aperture that is more open. In this image, I'm modelling the case of 60 geophones spanning 3000 metres, spaced evenly at 100 metres apart. This layout suggests merely 4 or 5 ray paths will hit the uppermost reflection, the shortest ray paths at small offsets. Also, there is usually no geophone directly on top of the source location to record a vertical ray path at zero offset. The deepest reflections however, should have plenty of fold, as long as NMO stretch, geometric spreading, and noise levels were good.

The problem with determining the range of useful offsets by way of a model is, not only does it require a velocity profile, which is easily attained from a sonic log, VSP, or velocity analysis, but it also requires an estimation of the the speed, intensity, and duration of the near surface events to be muted. Parameters that depend largely on the nature of the source and the local ground conditions, which vary from place to place, and from one season to the next.

In a future post, we'll apply this notion of useful offsets to build a pattern for shooting a 3D.

Click here for details on how I created this figure using the IPython Notebook. If you don't have it, IPython is easy to install. The easiest way is to install all of scientific Python, or use Canopy or Anaconda.

This post was inspired in part by Norm Cooper's 2004 article, A world of reality: Designing 3D seismic programs for signal, noise, and prestack time-migration. The Leading Edge23 (10), 1007-1014.DOI: 10.1190/1.1813357

Update on 2014-12-17 13:04 by Matt Hall

Don't miss the next installment — Laying out a seismic survey — with more IPython goodness!

Except where noted, this content is licensed

Except where noted, this content is licensed