On answering questions

/On Tuesday I wrote about asking better questions. One of the easiest ways to ask better questions is to hang back a little. In a lecture, the answer to your question may be imminent. Even if it isn't, some thinking or research will help. It's the same with answering questions. Better to think about the question, and maybe ask clarifying questions, than to jump right in with "Let me explain".

Here's a slightly edited example from Earth Science Stack Exchange:

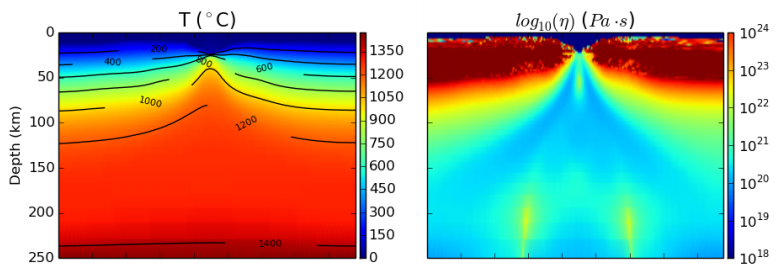

I suppose natural gas underground caverns on Earth have substantial volume and gas is in gaseous form there. I wonder how it would look like inside such cavern (with artificial light of course). Will one see a rocky sky at big distance?

The first answer was rather terse:

What is a good answer?

This answer, addressing the apparent misunderstanding the OP (original poster) has about gas being predominantly found in caverns, was the first thing that occurred to me too. But it's incomplete, and has other problems:

- It's not very patient, and comes across as rather dismissive. Not very welcoming for this new user.

- The reference is far from being an appropriate one, and seems to have been chosen randomly.

- It only addresses sandstone reservoirs, and even then only 'typical' ones.

In my own answer to the question, I tried to give a more complete answer. I tried to write down my principles, which are somewhat aligned with the advice given on the Stack Exchange site:

- Assume the OP is smart and interested. They were smart and curious enough to track down a forum and ask a question that you're interested enough in to answer, so give them some credit.

- No bluffing! If you find yourself typing something like, "I don't know a lot about this, but..." then stop writing immediately. Instead, send the question to someone you know that can give a better answer then you.

- If possible, answer directly and clearly in the first sentence. I usually write it in bold. This should be the closest you can get to a one-word answer, especially if it was a direct question.

- Illustrate the answer with an example. A picture or a numerical example — if possible with working code in an accessible, open source language — go a long way to helping someone get further.

- Be brief but thorough. Round out your answer with some different angles on the question, especially if there's nuance in your answer. There's no need for an essay, so instead give links and references if the OP wants to know more.

- Make connections. If there are people in your community or organization who should be connected, connect them.

It's remarkable how much effort people are willing to put into a great answer. A question about detecting dog paw-prints on a pressure pad, posted to the programming community Stack Overflow, elicited some great answers.

The thread didn't end there. Check out these two answers by Joe Kington, a programmer–geoscientist in Houston:

- One epic answer with code and animated GIFs, showing how to make a time-series of pawprints.

- A second answer, with more code, introducing the concept of eigenpaws to improve paw recognition.

A final tip: writing informative answers might be best done on Wikipedia or your corporate wiki. Instead of writing a long response to the post, think about writing it somewhere more accessible, and instead posting a link to your answer.

What do you think makes a good answer to a question? Have you ever received an answer that went beyond helpful?

Except where noted, this content is licensed

Except where noted, this content is licensed