Yesterday afternoon, I attended a talk at Dalhousie by Peter Cary, who has begun the CSEG distinguished lecture tour. Peter's work is well known in the seismic processing world, and he's now spreading his insights to the broader geoscience community. This was only his fourth stop out of 26 on the tour, so there's plenty of time to catch it.

Three steps of seismic processing

In the head-spinning jargon of seismic processing, if you're lost, it may not be your fault. Sometimes it might even seem like you're going in circles.

Ask the vendor or processing specialist first to keep it simple, and second to tell you which of the three processing stages you are in. Seismic data processing has three steps:

- Attenuate all types of noise.

- Remove the effects of the near surface.

- Migrate the data, a step sometimes called imaging.

If time migration is the workhorse of seismic processing, and if f–k filtering (or f–anything filtering) is the workhorse of noise attenuation, then surface-consistent deconvolution is the workhorse of the near surface. These topics aren't as sexy or as new as FWI or compressed sensing, but Peter has been questioning the basics of surface-consistent scaling and the approximations we make when processing land seismic data.

The ambiguity of phase and travel-time corrections

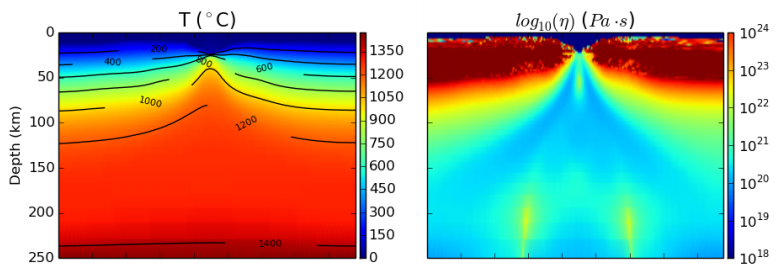

To the processor, removing the effects of the near surface means making things flat in the CMP domain. It turns out you can do this with travel-time corrections (static shifts), with phase corrections, or with both.

A simple synthetic example showing (a) a gather with surface-consistent statics and phase variations; (b) the same gather after surface-consistent residual statics correction, and (c) after simultaneous surface-consistent statics and phase correction. Image © Cary & Nagarajappa and CSEG.
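To get a feel for why a static shift and a phase correction can stand in for each other, here is a minimal sketch, not Peter's method. It assumes a Ricker wavelet and illustrative values (30 Hz peak frequency, 30° rotation, 1 ms sampling); the function names are made up for this example. It compares a phase-rotated wavelet with a wavelet that has simply been shifted in time.

```python
import numpy as np

def ricker(f_peak, dt, half_length=0.128):
    """Zero-phase Ricker wavelet with peak frequency f_peak (Hz)."""
    n = int(round(half_length / dt))
    t = np.arange(-n, n) * dt
    a = (np.pi * f_peak * t) ** 2
    return (1.0 - 2.0 * a) * np.exp(-a)

def time_shift(trace, dt, shift):
    """Delay a trace by `shift` seconds using a linear phase ramp."""
    freqs = np.fft.rfftfreq(trace.size, dt)
    spectrum = np.fft.rfft(trace) * np.exp(-2j * np.pi * freqs * shift)
    return np.fft.irfft(spectrum, n=trace.size)

def phase_rotate(trace, degrees):
    """Apply a constant (frequency-independent) phase rotation."""
    spectrum = np.fft.rfft(trace) * np.exp(1j * np.deg2rad(degrees))
    return np.fft.irfft(spectrum, n=trace.size)

def similarity(a, b):
    """Normalized zero-lag correlation; 1.0 means identical shape."""
    return float(np.dot(a, b) / np.sqrt(np.dot(a, a) * np.dot(b, b)))

# Illustrative values only (assumptions, not taken from the talk).
dt, f_peak, rotation = 0.001, 30.0, 30.0
wavelet = ricker(f_peak, dt)
rotated = phase_rotate(wavelet, rotation)

# At frequency f, a rotation of phi looks like an advance of phi / (2*pi*f).
# Evaluating this at the peak frequency gives one "equivalent" static.
equivalent_shift = -np.deg2rad(rotation) / (2 * np.pi * f_peak)
shifted = time_shift(wavelet, dt, equivalent_shift)

print("rotated vs. original:", round(similarity(rotated, wavelet), 3))
print("rotated vs. shifted: ", round(similarity(rotated, shifted), 3))
```

For a band-limited wavelet like this one, the shifted copy should resemble the rotated one much more closely than the unshifted original does. That is the ambiguity in miniature: a statics-only solution can absorb much of a phase error, and the result can still look flat.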

It's troubling that there is more than one way to achieve flatness. Peter's advice is to use shot stacks and receiver stacks to compare the efficacy of static corrections. These stacks eliminate doubt about whether surface-consistent scaling is working, and they are a better QC tool than views in other data domains.
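As a rough illustration of what shot and receiver stacks are, the sketch below averages all traces that share a shot index, and separately all traces that share a receiver index. It assumes the traces have already had moveout and statics applied, so that reflections should line up; the array names (`traces`, `shot_id`, `receiver_id`) and the toy survey are hypothetical, and this is not the workflow from the talk.

```python
import numpy as np

def stack_by(traces, keys):
    """Average all traces that share a header value (shot or receiver index)."""
    unique_keys, group = np.unique(keys, return_inverse=True)
    sums = np.zeros((unique_keys.size, traces.shape[1]))
    np.add.at(sums, group, traces)  # accumulate traces into their group
    fold = np.bincount(group, minlength=unique_keys.size)
    return unique_keys, sums / fold[:, None]

# Hypothetical toy survey: 3 shots x 4 receivers, 50 samples per trace.
rng = np.random.default_rng(0)
shot_id = np.repeat(np.arange(3), 4)
receiver_id = np.tile(np.arange(4), 3)
traces = rng.normal(size=(shot_id.size, 50))

shots, shot_stacks = stack_by(traces, shot_id)
receivers, receiver_stacks = stack_by(traces, receiver_id)

print(shot_stacks.shape, receiver_stacks.shape)
```

One plausible way to use this is to compute both sets of stacks before and after a correction and compare them side by side; a residual error tied to a particular shot or receiver should then show up as a single trace that stands out from its neighbours.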

Deeper than shallow

It may sound trivial, but the hardest part about using seismic waves for imaging is that they have to travel down and back up through the near surface on their path to the target. It might seem counter-intuitive, but the geometric configurations that work well for the deep earth are not well suited to imaging the shallow earth, or to working out how we might correct for it. I can imagine that two surveys could be useful, one for the target and one for characterizing the shallow section that gets in the way of the target, but seismic experiments are already expensive enough when there is only one target to be concerned with.

Still, the near surface is something we can't avoid. Much like astronomers using ground-based telescopes shooting for the stars, seismic processors too have to get the noisy stuff that is sitting closest to the detectors out of the way.

Except where noted, this content is licensed
