Geocomputing: Call for papers

/52 Things .+? Geocomputing/ is in the works.

For previous books, we've reached out to people we know and trust. This felt like the right way to start our micropublishing project, because we had zero credibility as publishers and were asking people to take it on trust that anything would come of it.

Now we know we can do it, but personal invitation means writing to a lot of people. We only hear back from about 50% of everyone we write to, and only about 50% of those ever submit anything. So each book takes about 160 invitations.
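As a rough check on that arithmetic, here is a minimal sketch. It assumes the two 50% figures are independent rates that apply equally to every invitation, which is a simplification, and the function name `expected_submissions` is made up for illustration.

```python
def expected_submissions(invitations, reply_rate=0.5, submit_rate=0.5):
    """Estimate how many submissions a batch of invitations yields.

    Assumes (hypothetically) that every invitation has the same chance
    of a reply, and every reply the same chance of becoming a submission.
    """
    return invitations * reply_rate * submit_rate


# About a quarter of invitations turn into an essay under these assumptions.
print(expected_submissions(160))
```

Under these assumptions, only about one invitation in four turns into an essay, which is why inviting authors one by one is so much work.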

This time, I'd like to try something different, and see if we can truly crowdsource these books. If you would like to write a short contribution for this book on geoscience and computing, please have a look at the author guidelines. In a nutshell, we need about 600 words before the end of March. A figure or two is OK, and code is very much encouraged. Publication date: fall 2015.

We would also like to find some reviewers. If you would be available to read at least 5 essays, and provide feedback to us and the authors, please let me know.

In keeping with past practice, we will be donating money from sales of the book to scientific Python community projects via the non-profit NumFOCUS Foundation.

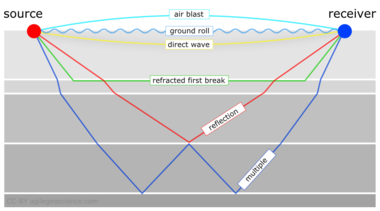

What the cover might look like. If you'd like to write for us, please read the author guidelines.

Except where noted, this content is licensed
