May linkfest

/The pick of the links from the last couple of months. We look for the awesome, so you don't have to :)

ICYMI on Pi Day, pimeariver.com wants to check how close river sinuosity comes to pi. (TL;DR — not very.)

If you're into statistics, someone at Imperial College London recently released a nice little app for stochastic simulations of simple calculations. Here's a back-of-the-envelope volumetric calculation by way of example. Good inspiration for our Volume* app.

I love it when people solve problems together on the web. A few days ago Chris Jackson (also at Imperial) posted a question about converting projected coordinates...

I responded with a code snippet that people quickly improved. Chris got several answers to his question, and I learned something about the pyproj library. Open source wins again!

In answering that question, I also discovered that Github now renders most IPython Notebooks. Sweet!

Speaking of notebooks, Beaker looks interesting: individual code blocks support different programming languages within the same notebook and allow you to pass data from one cell to another. For instance, you could do your basic stuff in Python, computationally expensive stuff in Julia, then render a visualization with JavaScript. Here's a simple example from their site.

Python is the language for science, but JavaScript certainly rules the visual side of the web. Taking after JavaScript data-artists like Bret Victor and Mike Bostock, Jack Schaedler has built a fantastic website called Seeing circles, sines, and signals containing visual explanations of signal processing concepts.

If that's not enough for you, there's loads more where that came from: Gallery of Concept Visualization. You're welcome.

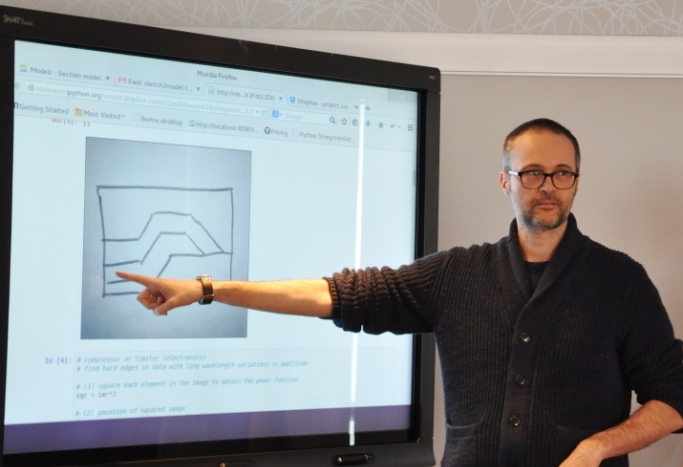

My recent notebook about finding small things with 2D seismic grids sparked some chatter on Twitter. People had some great ideas about modeling non-random distributions, like clustered or anisotropic populations. Lots to think about!

Getting help quickly is perhaps social media's most potent capability — though some people do insist on spoiling everything by sharing U might be a genius if u can solve this! posts (gah, stop it!). Earth Science Stack Exchange is still far from being the tool is can be, but there have been some relevant questions on geophysics lately:

- Why can Thomsen's parameter \(\epsilon\) be negative in VTI media?

- How should I choose the block size in constrained model-based inversion?

- Should one extract wavelets from seismic or well logs for the generation of synthetic traces?

- What are the fields in Petrel's IESX seismic horizon file?

- A couple of questions on mode conversion: one and two

A fun thread came up on Reddit too recently: Geophysics software you wish existed. Perfect for inspiring people at hackathons! I'm keeping a list of hacky projects for the next one, by the way.

Not much to say about 3D models in Sketchfab, other than: they're wicked! I mean, check out this annotated anticline. And here's one by R Mahon based on sedimentological experiments by John Shaw and others...

Rigid Lid Delta by rmahon1 on Sketchfab

Except where noted, this content is licensed

Except where noted, this content is licensed