Isn't everything on the internet free?

A couple of weeks ago I wrote about a new publication from Elsevier. The book seems to contain quite a bit of unlicensed copyrighted material, collected without proper permission from public and private groups on LinkedIn, SPE papers, and various websites. I had hoped to have an update for you today, but the company is still "looking into" the matter.

The comments on that post, and on Twitter, raised some interesting views. Like most views, these views usually come in pairs. There is a segment of the community that feels quite enraged by the use of (fully attributed) LinkedIn comments in a book; but many people hold the opposing view, that everything on the Internet is fair game.

I sympathise with this permissive view, to an extent. If you put stuff on the web, people are (one hopes) going to see it, interpret it, and perhaps want to re-use it. If they do re-use it, they may do so in ways you did not expect, or perhaps even disagree with. This is okay — this is how ideas develop.

I mean, if I can't use a properly attributed LinkedIn post as the basis for a discussion, or a YouTube video to illustrate a point, then what's really the point of those platforms? It would undermine the idea of the web as a place for interaction and collaboration, for cultural or scientific evolution.

Freely accessible but not free

Not to labour the point, but I think we all understand that what we put on the Internet is 'out there'. Indeed, some security researchers suggest you should assume that every email you type will be in the local newspaper tomorrow morning. This isn't just 'a feeling', it's built into how the web works. Most websites are exclusively composed of strictly copyrighted content, but most websites also have conspicuous buttons to share that copyrighted content: Tweet this, Pin that, or whatever. The signals are confusing... do you want me to share this or not?

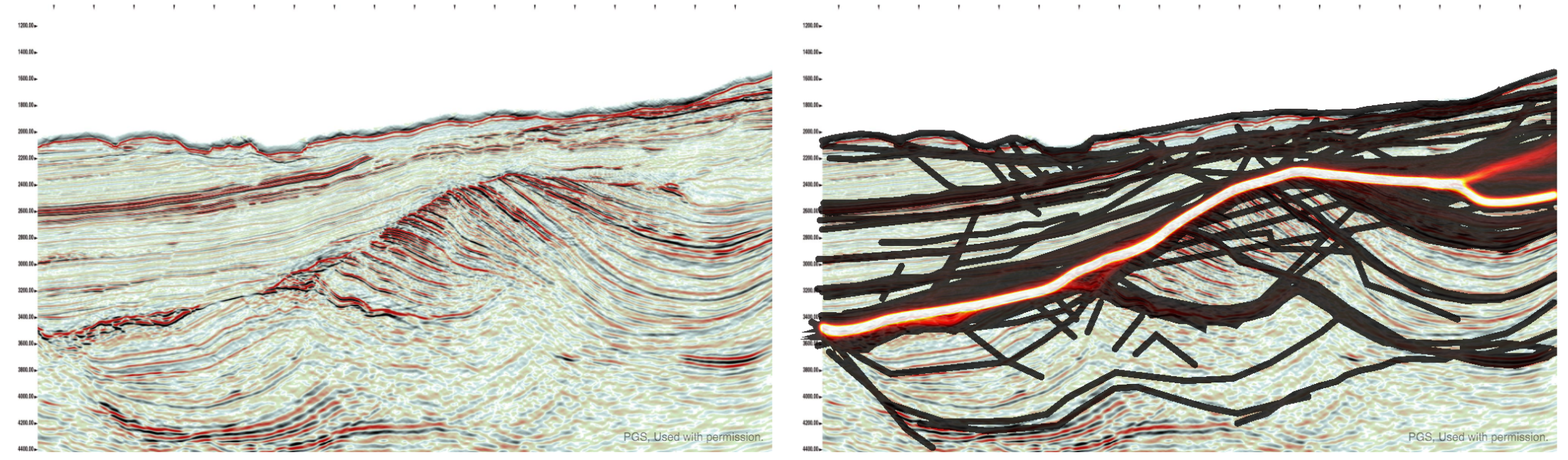

One can definitely get carried away with the idea that everything should be free. There's a spectrum of infractions. On the 'everyday abuse' end of things, we have the point of view that grabbing random images from the web and putting the URL at the bottom is 'good enough'. Based on papers at conferences, I suspect that most people think this and, as I explained before, it's definitely not true: you usually need permission.

At the other end of the scale, you end up with Sci-Hub (which sounds like it's under pressure to close at the moment) and various book-sharing sites, both of which I think are retrograde and anti-open-access (as well as illegal). I believe we should respect the copyright of others — even that of supposedly evil academic publishers — if we want others to respect ours.

So what's the problem with a bookful of LinkedIn posts and other dubious content? Leaving aside for now the possibility of more serious plagiarism, I think the main problem is simply that the author went too far — it is a wholesale rip-off of 350 people's work, not especially well done, with no added value, and sold for a hefty sum.

Best practice for re-using stuff on the web

So how do we know what is too far? Is it just a value judgment? How do you re-use stuff on the web properly? My advice:

- Stop it. Resist the temptation to Google around, grabbing whatever catches your eye.

- Re-use sparingly, only using one or two of the real gems. Do you really need that picture of a casino on your slide entitled "Risk and reward"? (No, you definitely don't.)

- Make your own. Ideas are not copyrightable, so it might be easier to copy the idea and make the thing you want yourself (giving credit where it's due, of course).

- Ask for permission from the creator if you do use someone's stuff. Like I said before, this is only fair and right.

- Go open! Preferentially share things by people who seem to be into sharing their stuff.

- Respect the work. Make other people's stuff look awesome. You might even...

- ...improve the work if you can — redraw a diagram, fix a typo — then share it back to them and the community.

- Add value. Add real insight, combine things in new ways, surprise and delight the original creators.

- And finally, if you're not doing any of these things, you'd better not be trying to profit from it.

Not everything on the Internet is free. My bet is that you'll be glad of this fact when you start putting your own stuff out there. We can all do our homework and model good practice. This is especially important for those people in influential positions in academia, because their behaviours rub off on so many impressionable people.

We talked to Fernando Enrique Ziegler on the Undersampled Radio podcast last week. He was embroiled in the 'bad book' furore too; in fact, he brought it to many people's attention. So this topic came up in the show, as well as a lot of stuff about pore pressure and hurricanes. Check it out...

Except where noted, this content is licensed
