Q is for Q

Quality factor, or \(Q\), is one of the more mysterious quantities of seismology. It's right up there with Lamé's \(\lambda\) and Thomsen's \(\gamma\). For one thing, it's wrapped up with the idea of attenuation, and sometimes the terms \(Q\) and 'attenuation' are bandied about seemingly interchangeably. For another thing, people talk about it like it's really important, but it often seems to be completely ignored.

A quick aside. There's another quality factor: the rock quality factor, popular among geomechanicists (geomechanics?). That \(Q\) describes the degree and roughness of jointing in rocks, and is probably related (coincidentally if not theoretically) to seismic \(Q\) in various nonlinear and probably profound ways. I'm not going to say any more about it, but if this interests you, read Nick Barton's book, Rock Quality, Seismic Velocity, Attenuation and Anisotropy (2006; CRC Press), if you can afford it.

So what is Q exactly?

We know intuitively that seismic waves lose energy as they travel through the earth. There are three loss mechanisms: scattering (elastic losses resulting from reflections and diffractions), geometrical spreading, and intrinsic attenuation. This last one, anelastic energy loss due to absorption — essentially the deviation from perfect elasticity — is what I'm trying to describe here.

I'm not going to get very far, by the way. For the full story, start at the seminal review paper entitled \(Q\) by Leon Knopoff (1964), which surely has the shortest title of any paper in geophysics. (Knopoff also liked short abstracts, as you see here.)

The dimensionless seismic quality factor \(Q\) is defined in terms of the energy \(E\) stored in one cycle, and the change in energy — the energy dissipated in various ways, such as fluid movement (AKA 'sloshing', according to Carl Reine's essay in 52 Things... Geophysics) and intergranular frictional heat ('jostling') — over that cycle:

$$ Q \stackrel{\mathrm{def}}{=} 2 \pi \frac{E}{\Delta E} $$

Remarkably, this same definition holds for any resonator, including pendulums and electronics. Physics is awesome!
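To make the definition concrete, here is a minimal Python sketch. The function names and the numbers (a 5% energy loss per cycle, a 1 Hz resonator with a design \(Q\) of 200) are my own illustrative assumptions, not values from any of the papers cited here. The resonator part assumes a lightly damped oscillator whose energy envelope decays as \(e^{-2\gamma t}\), with \(\gamma = \omega_0 / 2Q\).

```python
import math

def q_from_energy(stored, dissipated):
    """Q from the energy stored in a cycle and the energy lost over that cycle."""
    if dissipated == 0:
        raise ValueError("no energy dissipated: Q is undefined (infinite)")
    return 2 * math.pi * stored / dissipated

def fractional_loss_per_cycle(q):
    """Invert the definition: the fraction of stored energy lost in one cycle."""
    return 2 * math.pi / q

# A wave that loses 5% of its energy in each cycle (illustrative number).
q_wave = q_from_energy(stored=1.0, dissipated=0.05)
print(f"5% loss per cycle gives Q = {q_wave:.1f}")

# The same definition applied to a lightly damped resonator (assumed values).
q_design = 200.0
omega0 = 2 * math.pi * 1.0            # 1 Hz natural frequency, in rad/s
gamma = omega0 / (2 * q_design)       # amplitude decay rate, in 1/s
period = 2 * math.pi / omega0         # ignores the tiny shift due to damping

e_start = 1.0                                      # energy at start of a cycle
e_end = e_start * math.exp(-2 * gamma * period)    # energy one cycle later
q_resonator = q_from_energy(e_start, e_start - e_end)
print(f"Resonator: design Q = {q_design:.0f}, estimated Q = {q_resonator:.1f}")
```

The estimate for the resonator comes out a little above the design value, because the definition assumes the loss in one cycle is small compared with the stored energy. The lower the \(Q\), the worse that approximation gets.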

Because the right-hand side of that relationship is sort of upside down (the loss is in the denominator), it's often easier to talk about \(Q^{-1}\), which is, more or less, the fractional loss of energy over a single wavelength (strictly, \(\Delta E / E = 2\pi / Q\)). This inverse of \(Q\) is proportional to the attenuation coefficient. For more details on that relationship, check out Carl Reine's essay.
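For what it's worth, here is one common way to write that proportionality. It assumes a plane wave and \(Q \gg 1\). The symbols \(A\) (amplitude), \(x\) (distance travelled), \(\alpha\) (attenuation coefficient), \(f\) (frequency), \(v\) (velocity) and \(\lambda\) (wavelength) are my additions, not part of the definition above:

$$ A(x) = A_0 \, \mathrm{e}^{-\alpha x}, \qquad \alpha = \frac{\pi f}{Q v} = \frac{\pi}{Q \lambda} $$

Over one wavelength, then, the amplitude falls by a factor of \(\mathrm{e}^{-\pi / Q}\). Energy goes as amplitude squared, so it falls by \(\mathrm{e}^{-2\pi / Q} \approx 1 - 2\pi / Q\), which is consistent with \(\Delta E / E = 2\pi / Q\) from the definition.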

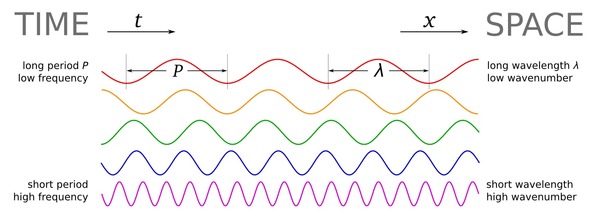

This connection with wavelengths means that we have to think about frequency. Because high frequencies have shorter cycles (by definition), they attenuate faster than low frequencies. You know this intuitively from hearing the beat, but not the melody, of distant music for example. This effect does not imply that \(Q\) depends on frequency... that's a whole other can of worms. (Confused yet?)
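Here's a small Python sketch of that effect. It assumes the usual constant-\(Q\) plane-wave decay, \(A / A_0 = \mathrm{e}^{-\pi f t / Q}\), which isn't stated above, and the values \(Q = 100\) and a traveltime of 2 s are purely illustrative. Every frequency pays the same toll per cycle, but the high frequencies go through more cycles in the same time.

```python
import math

def amplitude_remaining(freq_hz, traveltime_s, q):
    """Fraction of amplitude left after a given traveltime, for constant Q."""
    cycles = freq_hz * traveltime_s           # number of cycles travelled
    return math.exp(-math.pi * cycles / q)    # each cycle costs exp(-pi / Q)

Q = 100.0   # assumed, and the same at every frequency
T = 2.0     # assumed traveltime in seconds

for f in (10.0, 25.0, 50.0):
    remaining = amplitude_remaining(f, T, Q)
    print(f"{f:4.0f} Hz: {f * T:4.0f} cycles, {remaining:.3f} of the amplitude remains")
```

Note that `Q` is identical in every call. The frequency-dependent loss comes entirely from the number of cycles, not from any change in \(Q\).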

The frequency dependence of \(Q\)

It's thought that \(Q\) is roughly constant with respect to frequency below about 1 Hz, then increases with \(f^\alpha\), where \(\alpha\) is about 0.7, up to at least 25 Hz (I'm reading this in Mirko van der Baan's 2002 paper), and probably beyond. Most people, however, seem to throw their hands up and assume a constant \(Q\) even in the seismic bandwidth... mainly to make life easier when it comes to seismic processing. Attempting to measure, let alone compensate for, \(Q\) in seismic data is, I think it's fair to say, an unsolved problem in exploration geophysics.
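To get a feel for what that power law does, here's a rough Python sketch. It takes the description above at face value: constant \(Q\) below a corner frequency of 1 Hz, then growing as \(f^\alpha\) with \(\alpha = 0.7\). The low-frequency value `q0 = 50` and the function name are hypothetical, chosen only for illustration, and the sharp corner at 1 Hz is a simplification of mine.

```python
def q_of_f(freq_hz, q0=50.0, corner_hz=1.0, alpha=0.7):
    """Toy model: constant Q below the corner, power-law growth above it."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    if freq_hz <= corner_hz:
        return q0
    return q0 * (freq_hz / corner_hz) ** alpha

for f in (0.1, 1.0, 5.0, 25.0):
    print(f"f = {f:5.1f} Hz -> Q = {q_of_f(f):6.1f}")
```

Under these assumptions \(Q\) grows by nearly an order of magnitude between 1 Hz and 25 Hz, which gives some idea of how much a constant-\(Q\) assumption could be glossing over.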

Why is it worth solving? I think the main point is that, if we could model and measure it better, it could be a semi-independent measure of some rock properties we care about, especially velocity. Actually, I think it's even a stretch to call velocity a rock property. Most people know that velocity depends on frequency, at least across the gulf of frequencies between seismic and acoustic logging tools, but did you know that velocity also depends on amplitude? Paul Johnson writes about this effect in his essay in the forthcoming 52 Things... Rock Physics book; stay tuned for more on that.

For a really wacky story about negative values of \(Q\) — which imply transmission coefficients greater than 1 (think about that) — check out Chris Liner's essay in the same book (or his 2014 paper in The Leading Edge). It's not going to help \(Q\) get any less mysterious, but it's a good story. Here's the punchline from a Jupyter Notebook I made a while back; it follows along with Chris's lovely paper:

Top: Velocity and the Backus average velocity in the E-38 well offshore Nova Scotia. Bottom: Layering-induced attenuation, or 1/Q, in the same well. Note the negative numbers! Reproduction of Liner's 2014 results in a Jupyter Notebook.

Hm, I had hoped to shed some light on \(Q\) in this post, but I seem to have come full circle. Maybe explaining \(Q\) is another unsolved problem.

References

Barton, N (2006). Rock Quality, Seismic Velocity, Attenuation and Anisotropy. Florida, USA: CRC Press. 756 pages. ISBN 9780415394413.

Johnson, P (in press). The astonishing case of non-linear elasticity. In: Hall, M & E Bianco (eds), 52 Things You Should Know About Rock Physics. Nova Scotia: Agile Libre, 2016, 132 pp.

Knopoff, L (1964). Q. Reviews of Geophysics 2 (4), 625–660. DOI: 10.1029/RG002i004p00625.

Reine, C (2012). Don't ignore seismic attenuation. In: Hall, M & E Bianco (eds), 52 Things You Should Know About Geophysics. Nova Scotia: Agile Libre, 2012, 132 pp.

Liner, C (2014). Long-wave elastic attenuation produced by horizontal layering. The Leading Edge 33 (6), 634–638. DOI: 10.1190/tle33060634.1. Chris also blogged about this article.

Liner, C (in press). Negative Q. In: Hall, M & E Bianco (eds), 52 Things You Should Know About Rock Physics. Nova Scotia: Agile Libre, 2016, 132 pp.

van der Baan, M (2002). Constant Q and a fractal, stratified Earth. Pure and Applied Geophysics 159 (7–8), 1707–1718. DOI: 10.1007/s00024-002-8704-0.

Except where noted, this content is licensed
