Times are rock hard in industry right now.

If you have a job, you're lucky — you have probably already survived one round of layoffs. There will likely be more, especially when the takeovers start, which they will. I hope you survive those too.

If you don't have a job, you probably feel horrible, but of course that won't get you anywhere. I heard one person call it an 'involuntary sabbatical', and I love that: it's the best chance you'll get to re-invent, re-learn, and find new direction.

If you're a student, you must be looking out over the wasteland and wondering what's in store for you. What on earth?

More than one person has asked me recently about Agile. "You got out," they say, "how did you do it?" So instead of bashing out another email, I thought I'd blog about it.

Consulting in 2015

I didn't really get out, of course; I just quit and moved to rural Nova Scotia.

Living out here does make it harder to make a living, and things on this side of the fence, so to speak, are pretty gross too, I'm afraid. Talking to others at SEG suggested that I'm not alone among small companies in this view. A few of the larger outfits seem to be doing well, IKON and GeoTeric for instance, but they also have products, which at least offers some income diversity.

Agile started as a 100% bootstrapped effort to build a consulting firm that is more directly useful to individual professional geoscientists than any other. Most firms target corporate accounts, and hiring them takes permission, a complicated contract, an AFE, and 3 months of bureaucracy. It turns out, though, that professionals are unable or unwilling to engage at that lower, grass-roots level: almost everyone thinks you need permission, contracts, AFEs, and so on to hire anyone in any capacity, even for something as small as "Help me tie this well." So usually we are hired into larger, longer-term projects, just like anyone else.

I still think there's something in this original idea — the Uberification of consulting services, if you will — maybe we'll try again in a few years.

But if you are out of work and were thinking of getting out there as a consultant... I'm an optimistic person, but unless you are very well known (for being awesome), it's hard for me to honestly recommend even trying. It's just not the reality right now. We've been lucky so far, because we work in geothermal and government as well as in petroleum, but oil & gas was over half our revenue last year. It will be about 0% of it this year, maybe slightly less.

The transformation of Agile

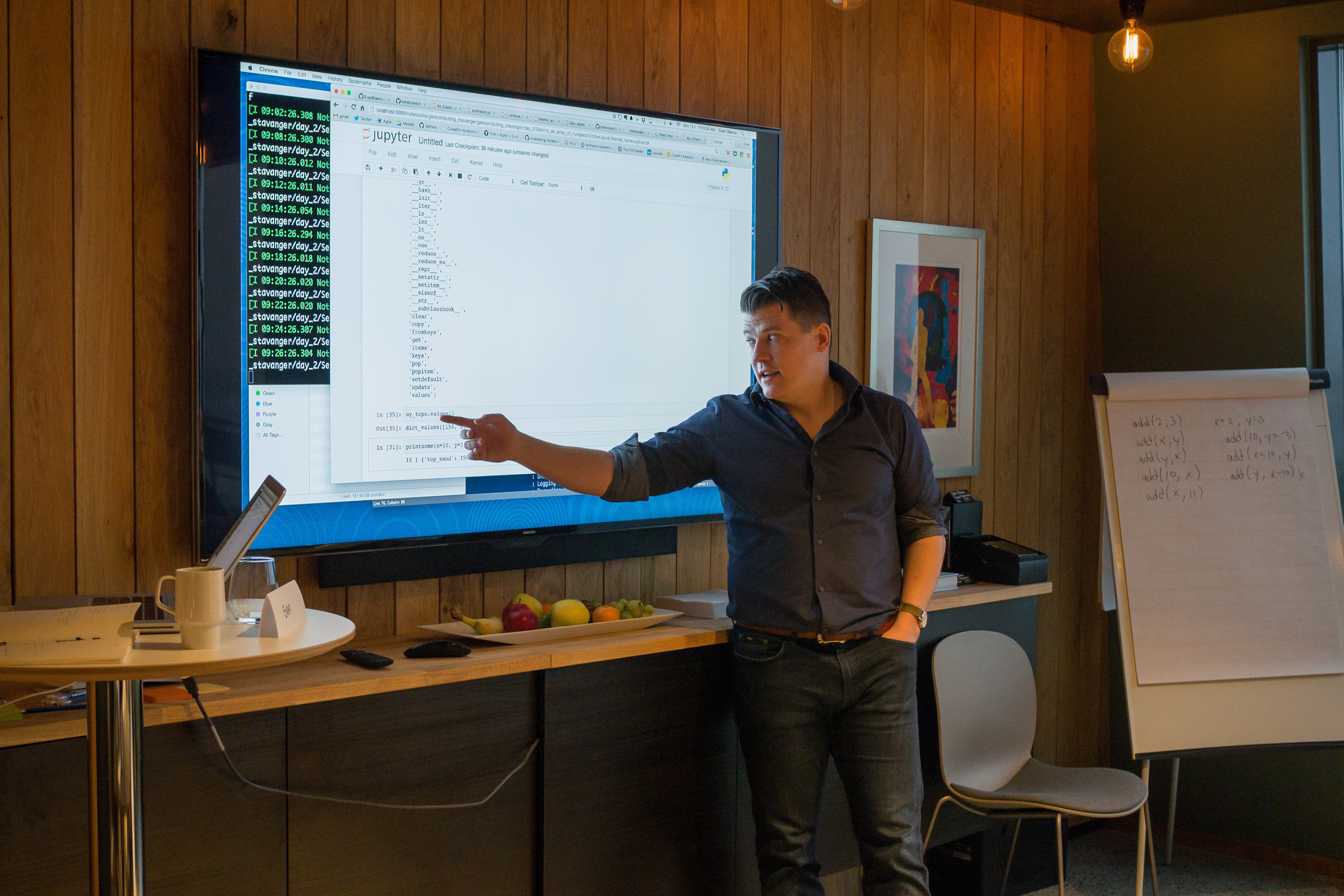

All of which is to explain why we are now, since January, consciously and deliberately turning ourselves into a software technology R&D company. The idea is to be less dependent on our dysfunctional industry, and less dependent on geotechnical work. We build new tools for hard problems — data problems, interpretation problems, knowledge sharing problems. And we're really good at it.

We hired another brilliant programmer in August, and we're all learning more every day about our playground of scientific computing and the web: machine learning, cloud services, JavaScript frameworks, and so on. The first thing we built was modelr.io, which is still in active development. Our latest project centres on our tool pickthis.io. I hope it works out, because it's the most fun I've had on a project in ages. Maybe these projects will spin out of Agile; maybe we'll keep them in-house.

So that's our survival plan: invent, diversify, and re-tool like crazy. And keep blogging.

F**k it

Some people are saying, "Things will recover; sit it out," but I think that's awful advice, the very worst. I honestly think your best bet right now* is to find an accomplice, set aside 6 months and some of your savings, push everything off your desk, and do something totally audacious.

Something you can't believe no-one has thought of doing yet.

Whatever it was you just thought of — that's the thing.

You might as well get started.

Except where noted, this content is licensed