Unweaving the rainbow

/Last week at the Canada GeoConvention in Calgary I gave a slightly silly talk on colourmaps with Matteo Niccoli. It was the longest, funnest, and least fruitful piece of research I think I've ever embarked upon. And that's saying something.

Freeing data from figures

It all started at the Unsession we ran at the GeoConvention in 2013. We asked a roomful of geoscientists, 'What are the biggest unsolved problems in petroleum geoscience?'. The list we generated was topped by Free the data, and that one topic alone has inspired several projects, including this one.

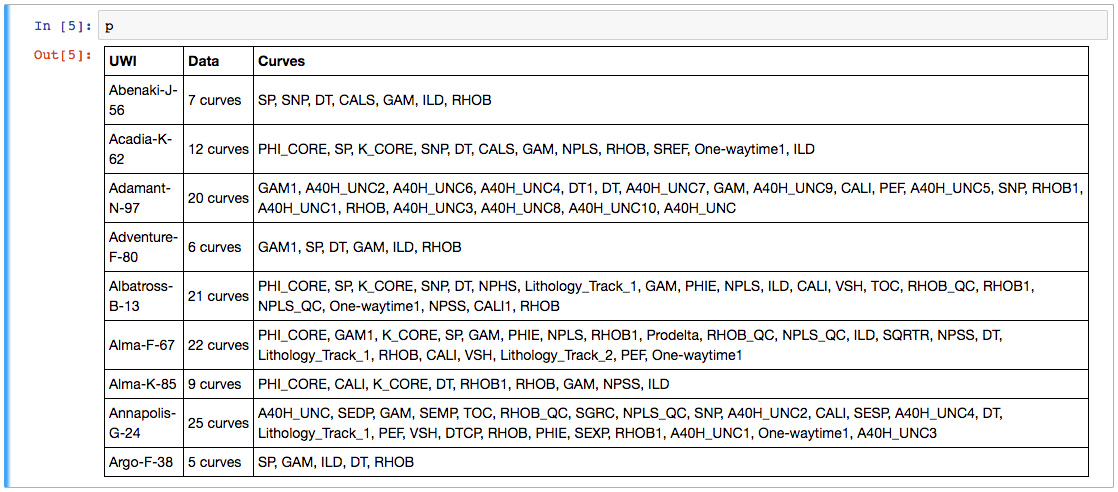

Our goal: recover digital data from any pseudocoloured scientific image, without prior knowledge of the colourmap.

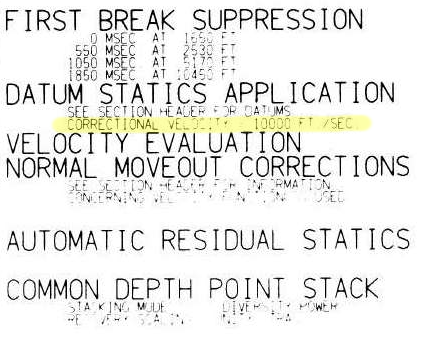

I subsequently proferred this challenge at the 2015 Geophysics Hackathon in New Orleans, and a team from Colorado School of Mines took it on. Their first step was to plot a pseudocoloured image in (red, green blue) space, which reveals the colourmap and brings you tantalizingly close to retrieving the data. Or so it seems...

Here's our talk:

Except where noted, this content is licensed

Except where noted, this content is licensed