All the elastic moduli

An elastic modulus is the ratio of stress (pressure) to strain (deformation) in an isotropic, homogeneous elastic material:

$$ \mathrm{modulus} = \frac{\mathrm{stress}}{\mathrm{strain}} $$

OK, what does that mean?

Elastic means what you think it means: you can deform it, and it springs back when you let go. Imagine stretching a block of rubber, like the picture here. If you measure the stress \(F/W^2\) (i.e. the pressure is force per unit of cross-sectional area) and strain \(\Delta L/L\) (the stretch as a proportion) along the direction of stretch ('longitudinally'), then the stress/strain ratio gives you Young's modulus, \(E\).
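As a quick sanity check on the units, here is a minimal sketch of that stress/strain calculation for a block with a square \(W \times W\) cross-section. The function name `youngs_modulus` and the sample dimensions and load are hypothetical, chosen only to land in the typical range for rocks.

```python
def youngs_modulus(force, width, length, delta_length):
    """Young's modulus E = stress / strain for a block with a W x W cross-section."""
    stress = force / width**2       # Pa: force per unit cross-sectional area, F/W^2
    strain = delta_length / length  # unitless: fractional change in length, dL/L
    return stress / strain


# Hypothetical sample: 5 cm x 5 cm cross-section, 10 cm long,
# lengthened by 10 micrometres under a 5 kN load.
E = youngs_modulus(force=5e3, width=0.05, length=0.10, delta_length=1e-5)
print(f"E = {E / 1e9:.1f} GPa")  # → E = 20.0 GPa
```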

Since strain is unitless, all the elastic moduli have units of pressure (pascals, Pa), and for rocks they are usually on the order of tens of GPa (billions of pascals).

The other elastic moduli are:

Shear modulus, \(\mu\) or \(G\), a measure of shear elasticity.

1st Lamé parameter, \(\lambda\), which famously 'has no physical interpretation'. I bet Bill Goodway would disagree: he calls \(\lambda\) "a pure, unambiguous and true measure of incompressibility, as it relates normal axial and lateral strain to uniaxial normal stress" (CSEG Recorder, June 2014).

Bulk modulus, \(K\), a measure of volumetric elasticity.

P-wave modulus, \(M\), a measure of longitudinal elasticity that shows up — as \(\lambda + 2\mu\) — all over the place in seismology, for example in the isotropic elastic wave equation. Still, it doesn't get a lot of love.

There's another quantity that doesn't fit our definition of a modulus, and doesn't have units of pressure — in fact it's unitless — but is always lumped in with the others:

Poisson's ratio, \(\nu\), the ratio of transverse to longitudinal strain.
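Strictly, \(\nu\) is defined with a minus sign, so that it comes out positive for ordinary materials that get thinner when stretched. A minimal sketch follows; the function name `poissons_ratio` and the strain values are hypothetical.

```python
def poissons_ratio(transverse_strain, longitudinal_strain):
    """Poisson's ratio, nu = -(transverse strain) / (longitudinal strain)."""
    # The minus sign makes nu positive for ordinary materials, which get
    # thinner (negative transverse strain) when stretched (positive
    # longitudinal strain).
    return -transverse_strain / longitudinal_strain


# Hypothetical: a 0.01% stretch makes the sides contract by 0.0025%.
nu = poissons_ratio(transverse_strain=-2.5e-5, longitudinal_strain=1e-4)
print(f"nu = {nu:.2f}")  # → nu = 0.25
```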

What does this have to do with my data?

Interestingly, and usefully, the elastic properties of isotropic materials are described completely by any two moduli. This means that, given any two, we can compute all of the others. More usefully still, we can also relate them to \(V_\mathrm{P}\), \(V_\mathrm{S}\), and \(\rho\). This is great because we can get at those properties easily via well logs and less easily via seismic data. So we have a direct path from routine data to the full suite of elastic properties.
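To make the "path from routine data" concrete, here is a minimal sketch that starts from \(V_\mathrm{P}\), \(V_\mathrm{S}\) and \(\rho\) and derives the rest using the standard isotropic relations \(\mu = \rho V_\mathrm{S}^2\), \(\lambda = \rho (V_\mathrm{P}^2 - 2V_\mathrm{S}^2)\) and \(M = \rho V_\mathrm{P}^2\), then goes from the pair \((\lambda, \mu)\) to everything else. The function name `moduli_from_velocities` and the log values are hypothetical; SI units are assumed throughout.

```python
def moduli_from_velocities(vp, vs, rho):
    """Isotropic elastic moduli (Pa) and Poisson's ratio from Vp, Vs (m/s) and density (kg/m^3)."""
    mu = rho * vs**2                          # shear modulus
    lam = rho * (vp**2 - 2 * vs**2)           # 1st Lamé parameter
    M = rho * vp**2                           # P-wave modulus, equal to lam + 2*mu
    K = lam + 2 * mu / 3                      # bulk modulus
    E = mu * (3 * lam + 2 * mu) / (lam + mu)  # Young's modulus
    nu = lam / (2 * (lam + mu))               # Poisson's ratio (unitless)
    return {"mu": mu, "lambda": lam, "M": M, "K": K, "E": E, "nu": nu}


# Hypothetical log readings: Vp = 3500 m/s, Vs = 2000 m/s, rho = 2400 kg/m^3.
moduli = moduli_from_velocities(vp=3500.0, vs=2000.0, rho=2400.0)
for name, value in moduli.items():
    if name == "nu":
        print(f"{name:>6}: {value:.3f}")
    else:
        print(f"{name:>6}: {value / 1e9:.2f} GPa")
```

Routing everything through \((\lambda, \mu)\) keeps each expression short; any other pair would work just as well, which is the point of the "any two moduli" rule.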

The only way to measure the elastic moduli themselves is on a mechanical press in the laboratory. The rock sample can be subjected to confining pressures, then squeezed or stretched along one or more axes. There are two ways to get at the moduli:

Directly, via measurements of stress and strain under so-called static conditions.

Indirectly, via sonic measurements and the density of the sample. Because of the oscillatory and transient nature of the sonic pulses, we call these dynamic measurements. In principle, these should be the most comparable to the measurements we make from well logs or seismic data.
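For the static route, one simple way to turn a series of press readings into a single modulus is to take the slope of a least-squares straight line through the stress–strain points. This is a sketch under assumptions: the readings are hypothetical, the function name `static_modulus` is illustrative, and real lab practice may instead use a tangent or secant modulus over a chosen part of the loading curve.

```python
def static_modulus(strain, stress):
    """Slope of an ordinary least-squares straight line through (strain, stress) points."""
    n = len(strain)
    mean_x = sum(strain) / n
    mean_y = sum(stress) / n
    # Covariance of strain and stress, and variance of strain (both unnormalised).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(strain, stress))
    sxx = sum((x - mean_x) ** 2 for x in strain)
    return sxy / sxx


# Hypothetical uniaxial readings: strain (unitless) and stress (Pa).
strain = [0.0, 2e-5, 4e-5, 6e-5, 8e-5, 1e-4]
stress = [0.0, 0.41e6, 0.79e6, 1.20e6, 1.61e6, 2.00e6]
E_static = static_modulus(strain, stress)
print(f"Static E ≈ {E_static / 1e9:.1f} GPa")
```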

Let's see the equations then

The elegance of the relationships varies quite a bit. Shear modulus \(\mu\) is just \(\rho V_\mathrm{S}^2\), but Young's modulus is not so pretty:

$$ E = \frac{\rho V_\mathrm{S}^2 (3 V_\mathrm{P}^2 - 4 V_\mathrm{S}^2) }{V_\mathrm{P}^2 - V_\mathrm{S}^2} $$
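One way to convince yourself the expression is right is to check it numerically against the more familiar \(E = 2\mu(1 + \nu)\), with \(\mu = \rho V_\mathrm{S}^2\) and \(\nu = (V_\mathrm{P}^2 - 2V_\mathrm{S}^2) / \big(2(V_\mathrm{P}^2 - V_\mathrm{S}^2)\big)\). The function names and velocity and density values below are hypothetical.

```python
import math


def youngs_from_velocities(vp, vs, rho):
    """E = rho Vs^2 (3 Vp^2 - 4 Vs^2) / (Vp^2 - Vs^2)."""
    return rho * vs**2 * (3 * vp**2 - 4 * vs**2) / (vp**2 - vs**2)


def youngs_from_mu_nu(vp, vs, rho):
    """The same quantity via E = 2 mu (1 + nu)."""
    mu = rho * vs**2
    nu = (vp**2 - 2 * vs**2) / (2 * (vp**2 - vs**2))
    return 2 * mu * (1 + nu)


vp, vs, rho = 3500.0, 2000.0, 2400.0  # hypothetical log values, SI units
assert math.isclose(youngs_from_velocities(vp, vs, rho), youngs_from_mu_nu(vp, vs, rho))
print(f"E = {youngs_from_velocities(vp, vs, rho) / 1e9:.1f} GPa")
```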

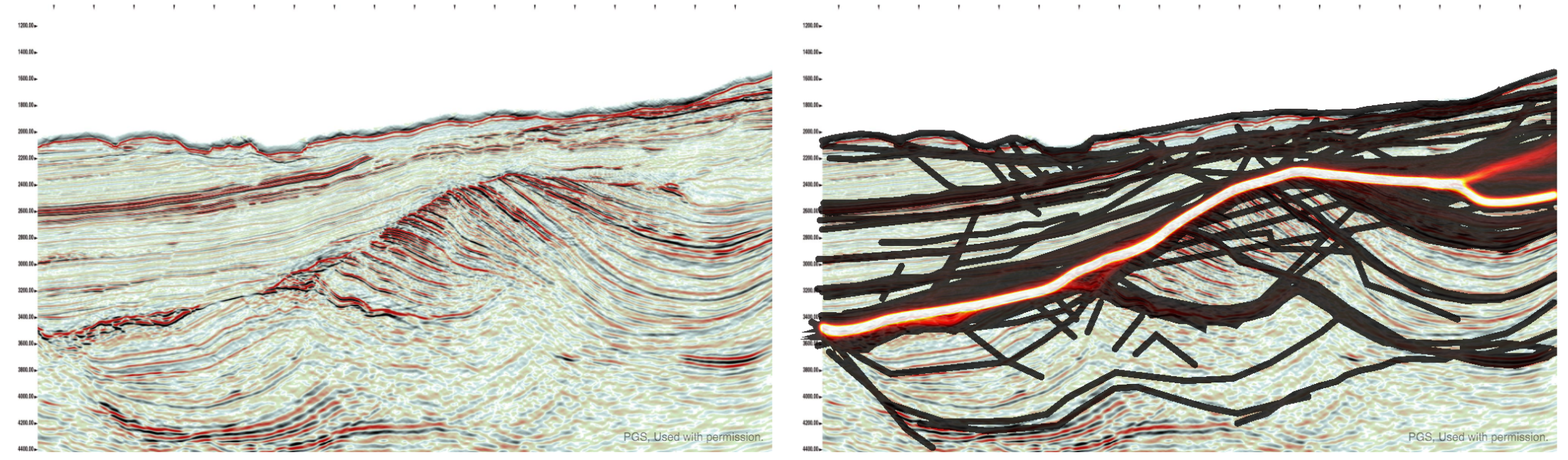

You can see most of the other relationships in this big giant grid I've been slowly chipping away at for ages. Some of it is shown below. It doesn't have most of the P-wave modulus expressions, because no-one seems too bothered about P-wave modulus, despite its obvious resemblance to acoustic impedance. They are in the version on Wikipedia, however (but it lacks the \(V_\mathrm{P}\) and \(V_\mathrm{S}\) expressions).

In this table, the mysterious quantity \(X\) is given by:

$$ X = \sqrt{9\lambda^2 + 2E\lambda + E^2} $$
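\(X\) appears when the known pair is \((E, \lambda)\). A minimal sketch, assuming the grid uses the same \((E, \lambda)\) conversions as the standard table on Wikipedia (which calls this quantity \(R\)): start from \(\lambda = \mu = 10\) GPa, compute \(E\), then recover \(\mu\), \(K\), \(M\) and \(\nu\) from \((E, \lambda)\) alone. The function name `moduli_from_E_lambda` is hypothetical.

```python
import math


def moduli_from_E_lambda(E, lam):
    """Other isotropic moduli from Young's modulus E and 1st Lamé parameter lambda."""
    X = math.sqrt(9 * lam**2 + 2 * E * lam + E**2)
    K = (E + 3 * lam + X) / 6   # bulk modulus
    mu = (E - 3 * lam + X) / 4  # shear modulus
    nu = 2 * lam / (E + lam + X)  # Poisson's ratio
    M = (E - lam + X) / 2       # P-wave modulus
    return {"K": K, "mu": mu, "nu": nu, "M": M}


# Round trip from hypothetical lambda = mu = 10 GPa.
lam, mu = 10e9, 10e9
E = mu * (3 * lam + 2 * mu) / (lam + mu)  # 25 GPa
out = moduli_from_E_lambda(E, lam)
for name, value in out.items():
    print(name, value if name == "nu" else value / 1e9)
```

Recovering \(\mu\) and \(M = \lambda + 2\mu\) exactly is a cheap check that the signs inside each expression are right.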

In the next post, I'll come back to this grid and tell you how I've been deriving all these equations using Python.


Except where noted, this content is licensed