Visual explanations of mathematics

It is thought that Euclid wrote Elements in about 300 BC, but Oliver Byrne turned it into one of the true gems of visualization, and made it about 100 times more readable. By seamlessly combining typeset text (Caslon, if you’re interested) with minimalist geometric drawings in primary colours, he didn’t just reproduce the text; he explained it in a new way.

If you like the look of it, it’s even cooler in Nicholas Rougeur’s beautiful interactive version.

This is a classic example of what Edward Tufte, the modern saint of visualization, calls a visual explanation (he wrote a whole book about the subject). We’ve written about it before (for example, see Evan’s 2014 post, Graphics that repay careful study). Figures and charts should do more than merely illustrate; they should elucidate.

Too often, equations — for example the myriad equations in any volume of GEOPHYSICS — do not elucidate. Indeed, they barely even illustrate. In some cases, it’s worse: they obfuscate. You might think mathematics is too dry, or too steeped in convention, for it to be any other way. Equations just are. But Byrne showed us that we can do better.

A few years ago, in an attempt to broaden my geophysical knowledge, I bought a copy of Daniel Fleisch’s book on Maxwell’s equations. It’s excellent, and the others in the series are good too. I especially liked the annotated equations; I’ve lightened the annotations in this version, to put them on a separate visual ‘layer’:

In 2010, Randall Munroe of xkcd applied a similar strategy to label The Flake Equation, his parody of the Drake equation:

There are still other examples out there.

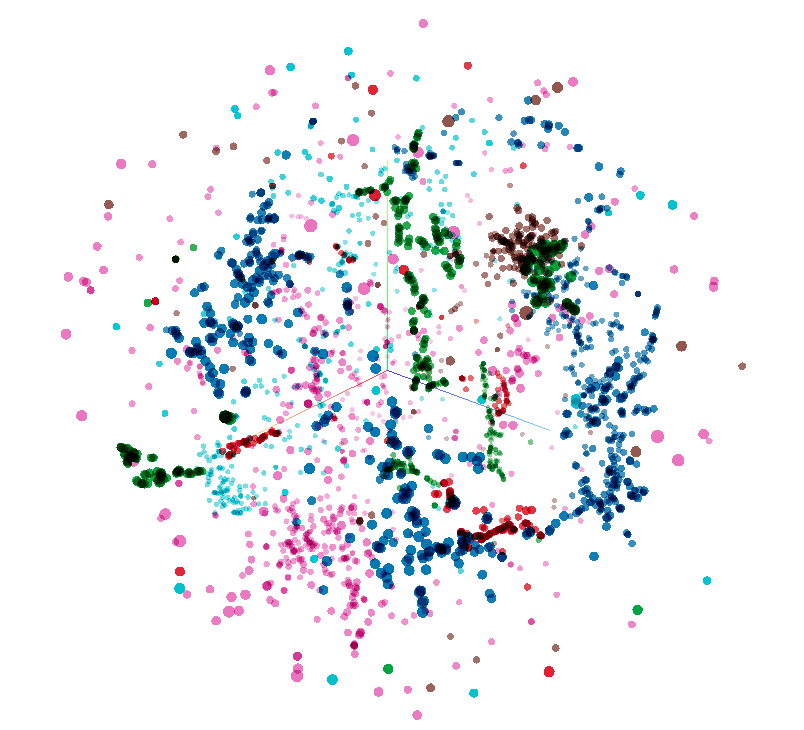

Later, I came across some lovely colourized equations by Stuart Riffle, a game developer. There was a bit of buzz about them on social media. Most people loved them, but a few pointed out that they suffer from the ‘legend lookup’ problem, and the colours he chose might not be great for colourblind people. Still, I like the concept — here’s the Fourier transform:

Direct annotation, something Tufte always advocates, avoids the legend lookup problem. In his 2016 Geophysics Tutorial on finite volume methods, Rowan Cockett showed that colour and labels can work together:

And in his Observable post on the predator–prey interaction, modern visualization legend Mike Bostock avoids the problem entirely by using pictograms, which directly depict what the symbols represent:

Observable is interesting because the documents are runnable code. And this reminds us that mathematics — equations, data structures, and so on — has another expression: code. While symbolic representation speaks directly to some people, code speaks to others, probably more. Look at Randall Munroe’s annotation of a Wolfram Alpha equation (similar to an Excel formula) from his (wonderful) book, What If:

What I love about this is the direct path to exploring the function yourself. It would take me an hour to implement Fleisch’s electric field integral in code, even with the annotations. Typing in this — admittedly less useful — rocket golf equation will take me two minutes. Expressing mathematics in code is the ultimate explicit and practical expression of an idea.
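To make that concrete, here is a minimal sketch of the discrete Fourier transform written so that the comments play the role of the annotations. It is an illustration only: it assumes the common unnormalized forward convention (negative exponent, no 1/N factor), which may not match the convention in Riffle's graphic, and the names `dft`, `signal` and `amplitudes` are mine.

```python
import cmath
import math


def dft(signal):
    """Discrete Fourier transform, written to mirror the textbook sum.

    Assumed convention: X_k = sum over n of x_n * exp(-2*pi*i*k*n/N),
    with no 1/N scaling.
    """
    n_samples = len(signal)
    spectrum = []
    for k in range(n_samples):            # k: the frequency we are asking about
        total = 0j
        for n, x_n in enumerate(signal):  # n: sample index, x_n: sample value
            # Spin the sample around the unit circle at frequency k...
            angle = -2 * math.pi * k * n / n_samples
            total += x_n * cmath.exp(1j * angle)
        spectrum.append(total)            # ...and add up where the points land
    return spectrum


# One full cycle of a cosine, sampled at 8 points.
signal = [math.cos(2 * math.pi * n / 8) for n in range(8)]
amplitudes = [round(abs(X_k), 6) for X_k in dft(signal)]
print(amplitudes)
```

The double loop is slow compared with a fast Fourier transform, but each line maps onto one symbol in the equation, which is the point here.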

We have lots of tools to write better mathematics: LaTeX, markdown, Jupyter Notebooks, and so on. But it feels like nothing has really converged yet. Technology that seamlessly mixes symbolic equations, illustrative-and-explicative annotation, and runnable code is, I am sure, not far off. Until then, we do the best we can with the tools we have.

Have you seen nice examples of annotated equations? I’d love to hear about them; let me know in the comments!

Don’t miss the follow-up post from 2021: Illuminated equations.

The work by Byrne is out of copyright. Those by Munroe and Cockett are openly licensed under Creative Commons. The work of Fleisch and Bostock is used in accordance with the Fair Use doctrine.

Except where noted, this content is licensed
