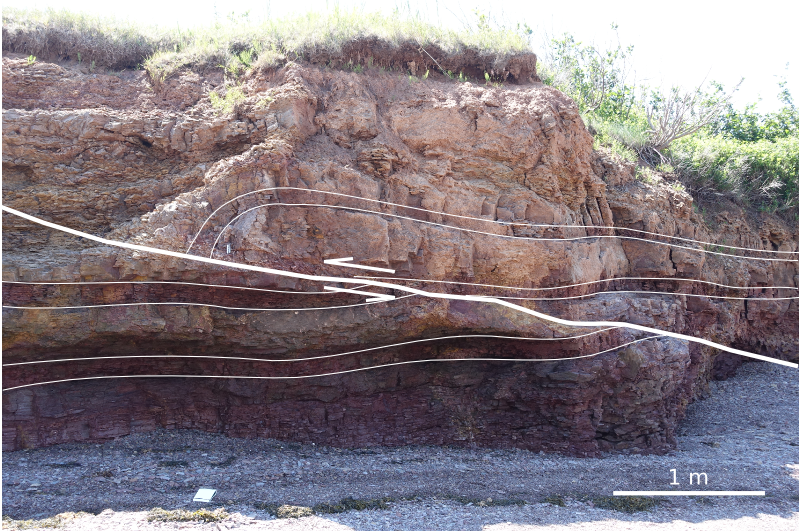

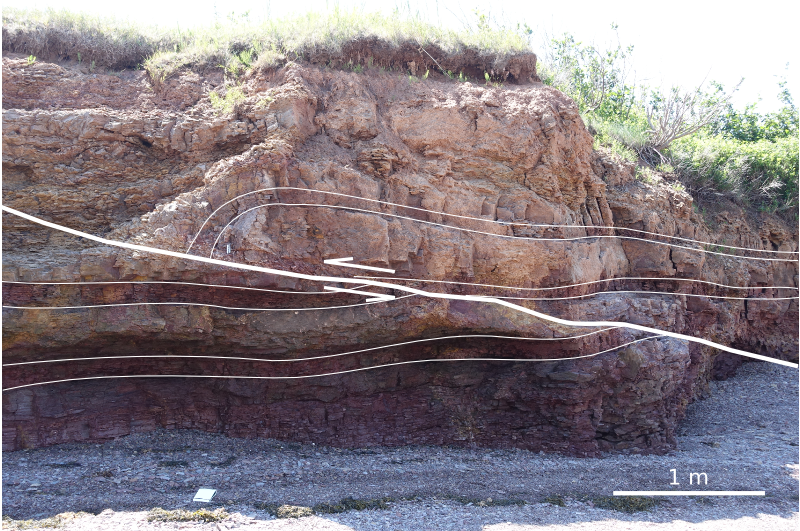

Where is the ground?

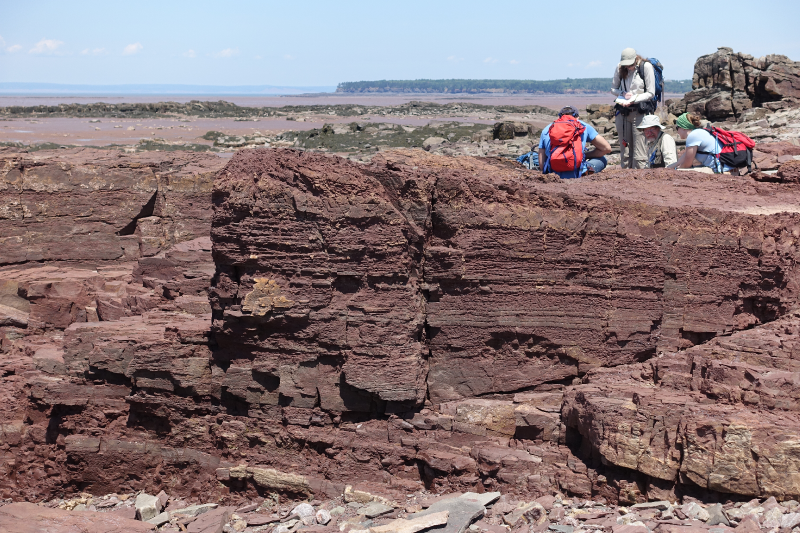

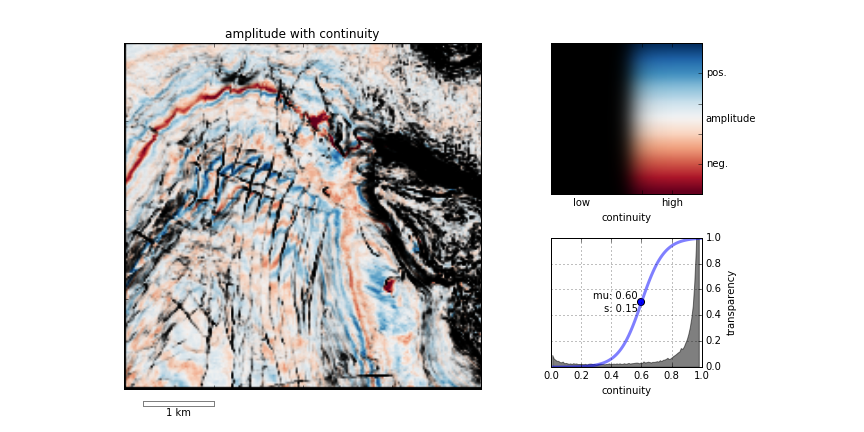

/This is the upper portion of a land seismic profile in Alaska. Can you pick a horizon where the ground surface is? Have a go at pickthis.io.

Pick the Ground surface at the top of the seismic section at pickthis.io.

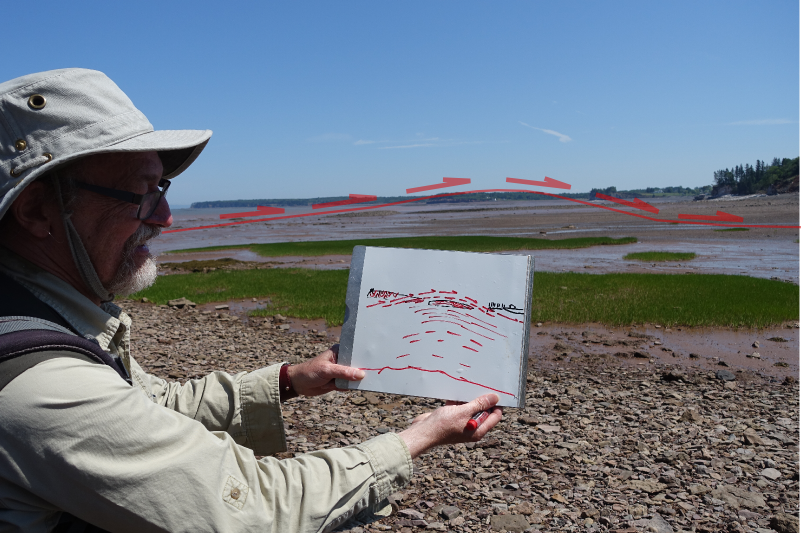

Picking the ground surface on land-based seismic data is not straightforward. Picking the seafloor reflection on marine data, on the other hand, is usually a piece of cake, a warm-up pick. You can often auto-track the whole thing with a few seeds.

Seafloor reflection on Penobscot 3D survey, offshore Nova Scotia. from Matt's tutorial in the April 2016 The Leading Edge, The function of interpolation.

Why aren't interpreters more nervous that we don't know exactly where the surface of the earth is? I'm sure I'm not the only one that would like to have this information while interpreting. Wouldn't it be great if land seismic were more like marine?

Treacherously Jagged TopographY or Near-Surface processing ArtifactS?

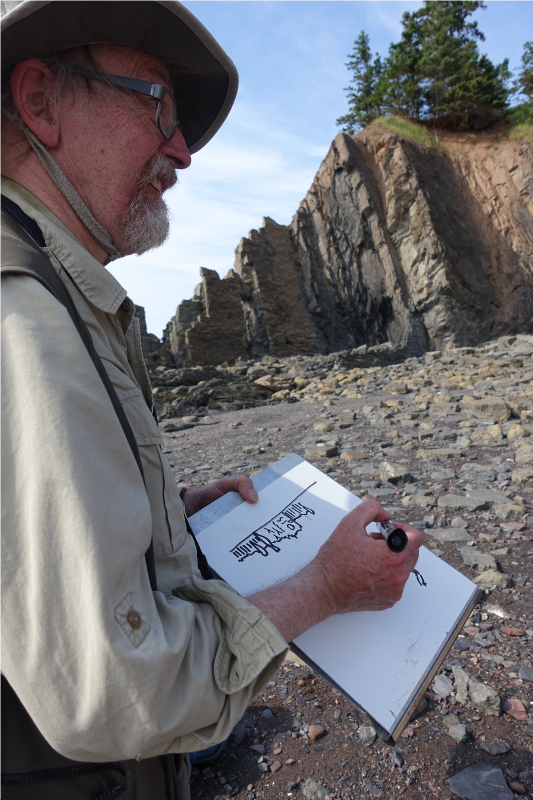

If you're new to land-based seismic data, you might notice that there isn't a nice pickable event across the top of the section like we find in marine seismic data. Shot noise at the surface has been muted (deleted) in processing, and the low fold produces an unclean, jagged look at the top of the section. Additionally, the top of the section, time-zero — the seismic reference datum — usually floats somewhere above the land surface — and we can't know where that is unless it can be found in the file header, or looked up in the processing report.

The seismic reference datum, at a two-way time of zero seconds on seismic data, is typically set at mean sea level for offshore data. For land data, it is usually chosen to 'float' above the land surface.

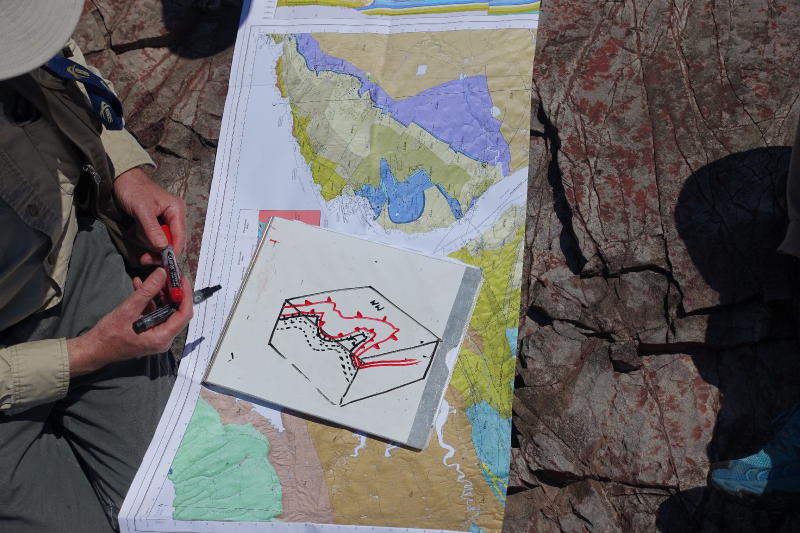

Reframing the question

This challenge is a bit of a trick question. It begs the viewer to recognize that the seemingly simple task of mapping the ground level on a land seismic section is actually a rudimentary velocity modeling or depth conversion exercise in itself. Wouldn't it be nice to have the ground surface expressed as pickable seismic event? Shouldn't we have it always in our images? Baked into our data, so to speak, such that we've always got an unambiguous pick? In the next post, I'll illustrate what I mean and show what's involved in putting it in.

In the meantime, I challenge you to pick where you think the (currently absent) ground surface is on this profile, so in the next post we can see how well you did.

Except where noted, this content is licensed

Except where noted, this content is licensed