x lines of Python: contour maps

Difficulty rating: EASY

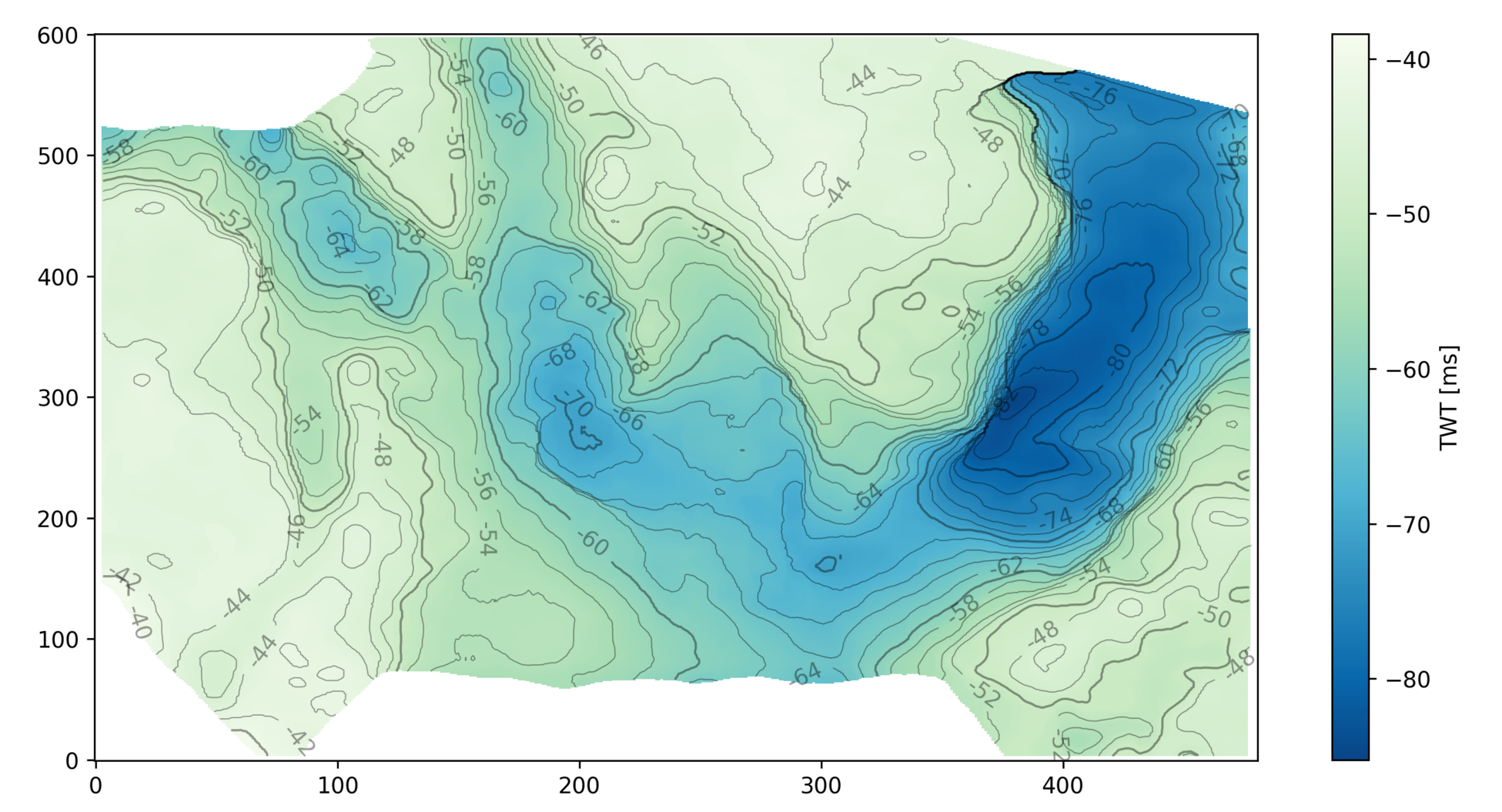

Following on from the post a couple of weeks ago about colourmaps, I wanted to poke into contour maps a little more. Ostensibly, making a contour plot in matplotlib is a one-liner:

```python
plt.contour(data)
```

But making a contour plot look nice takes a little more work than most of matplotlib's other plotting functions. For example, to change the contour levels you need to make an array containing the levels you want... another line of code. Adding index contours needs another line. And then there's all the other plotty stuff.
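To make those two extra lines concrete, here is a minimal sketch on a made-up surface. The array `data`, the contour interval of 2 and the index interval of 10 are all assumptions for illustration, not values from the notebook.

```python
import numpy as np
import matplotlib
matplotlib.use('Agg')  # Non-interactive backend; drop this line in a notebook.
import matplotlib.pyplot as plt

# Synthetic dome standing in for real data (an assumption for this sketch).
y, x = np.mgrid[0:50, 0:80]
data = 30 * np.exp(-((x - 40)**2 + (y - 25)**2) / 400)

# One extra line for the levels: every 2 units from 0 to 30.
levels = np.arange(0, 32, 2)

# Another for index contours: a heavier line at every multiple of 10.
linewidths = [1.0 if level % 10 == 0 else 0.5 for level in levels]

fig, ax = plt.subplots()
cs = ax.contour(data, levels=levels, linewidths=linewidths, colors='black')
fig.canvas.draw()  # Render; use plt.show() or fig.savefig(...) to see the result.
```

Passing a list to `linewidths` applies one width per level, which is what lets the index contours stand out.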

Here's what we'll do:

- Load the data from a binary NumPy file.

- Check the data looks OK.

- Get the min and max values from the map.

- Generate the contour levels.

- Make a filled contour map and overlay contour lines.

- Make a map with index contours and contour labels.
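The first four steps can be sketched without the real data. This example writes a small, hypothetical grid to a temporary `.npy` file first, so the file name and values are stand-ins, not the Penobscot seabed.

```python
import os
import tempfile
import numpy as np

# Hypothetical stand-in for the real file: a small grid with one missing value.
grid = np.linspace(-120.0, -80.0, 12).reshape(3, 4)
grid[0, 0] = np.nan
path = os.path.join(tempfile.mkdtemp(), 'example_surface.npy')
np.save(path, grid)

# Load the data from a binary NumPy file.
surface = np.load(path)

# Check the data looks OK: shape, type, and how many values are missing.
print(surface.shape, surface.dtype)
print('NaNs:', np.isnan(surface).sum())

# Get the min and max, ignoring NaNs, rounded outwards to whole numbers.
mi, ma = np.floor(np.nanmin(surface)), np.ceil(np.nanmax(surface))

# Generate the levels, starting at the multiple of 10 at or below the minimum
# so that index contours land on round numbers.
step = 2
levels = np.arange(10 * (mi // 10), ma + step, step)
print(levels[0], levels[-1], len(levels))
```

Using `np.nanmin` and `np.nanmax` matters whenever the map has gaps, because the plain `min` and `max` return NaN if any value is missing.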

The accompanying notebook sets out all the code you will need. You can even run the code right in your browser, no installation required.

Here's the guts of the notebook:

```python
import numpy as np
import matplotlib.pyplot as plt

# Load the seabed horizon and flip its sign.
seabed = np.load('../data/Penobscot_Seabed.npy')
seabed *= -1

# Min and max, ignoring NaNs, rounded outwards to whole numbers.
mi, ma = np.floor(np.nanmin(seabed)), np.ceil(np.nanmax(seabed))

# Contour levels every 2 ms, starting from a multiple of 10.
step = 2
levels = np.arange(10*(mi//10), ma+step, step)

# Index contours: thicker lines at multiples of 10.
lws = [0.5 if level % 10 else 1 for level in levels]

# Make the plot
fig = plt.figure(figsize=(12, 8))
ax = fig.add_subplot(1,1,1)
im = ax.imshow(seabed, cmap='GnBu_r', aspect=0.5, origin='lower')
im.set_clim(mi, ma)  # Set the colour limits on the image, not the colorbar.
cb = plt.colorbar(im, label="TWT [ms]")
params = dict(linestyles='solid', colors=['black'], alpha=0.4)
cs = ax.contour(seabed, levels=levels, linewidths=lws, **params)
ax.clabel(cs, fmt='%d')
plt.show()
```

This produces the following plot:
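The step list also mentions a filled contour map with contour lines on top. A rough sketch of that variant, using `contourf` on a synthetic surface (the array and the colorbar label are assumptions, not the seabed data), might look like this:

```python
import numpy as np
import matplotlib
matplotlib.use('Agg')  # Non-interactive backend; drop this line in a notebook.
import matplotlib.pyplot as plt

# Synthetic undulating surface, roughly between 80 and 120 (made up).
y, x = np.mgrid[0:60, 0:90]
surface = 100 + 20 * np.sin(x / 15) * np.cos(y / 10)

# Same level recipe as the main listing.
mi, ma = np.floor(surface.min()), np.ceil(surface.max())
step = 2
levels = np.arange(10 * (mi // 10), ma + step, step)

fig, ax = plt.subplots(figsize=(12, 8))

# Filled contours first, then thin lines drawn over them at the same levels.
cf = ax.contourf(surface, levels=levels, cmap='GnBu_r')
cs = ax.contour(surface, levels=levels, colors='black',
                linewidths=0.5, alpha=0.4)
fig.colorbar(cf, ax=ax, label='Value')
fig.canvas.draw()  # Render; use plt.show() or fig.savefig(...) to see the result.
```

Sharing one `levels` array between `contourf` and `contour` keeps the lines exactly on the colour boundaries.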

Except where noted, this content is licensed
