Running away from easy

Matt and I are in Calgary at the 2017 GeoConvention. Instead of writing about highlights from Day 1, I wanted to pick on one awesome thing I saw. Throughout the convention, there is an air of sadness, of nostalgia, of struggle. But I detect a divide among us. There are people who are waiting for things to return to how they were, when life was easy. Others are exploring how to be a part of the change, instead of a victim of it. Things are no longer easy, but easy is boring.

Want to start an oil and gas company? What resources are you going to need? Computers, pricey software applications, data. Purchase all of this stuff as a one-time capital expense, build a team, get an office lease, buy desks and a Keurig. Then if all goes well, 18 months later you'll have a slide deck outlining a play that you could pitch to investors.

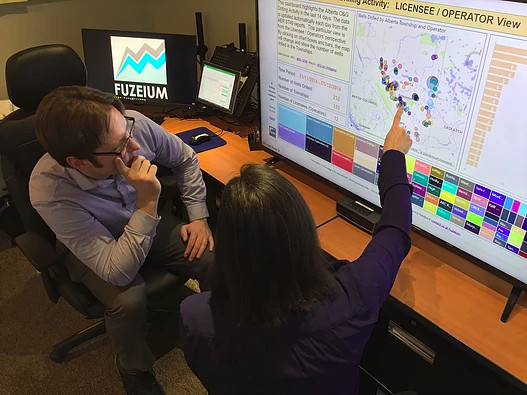

Imagine getting started without laying down a huge amount of capital for all those things. What if you could rent a desk at a co-working space, access the suite of software tools that you're used to, and use their Keurig? The computer infrastructure and software are managed and maintained by an IT service company so you don't have to worry about them.

Yesterday at the Calgary GeoConvention I heard all about how ReSourceYYC, a co-working space catering to oil and gas professionals, has introduced ResourceNET, a subscription-based cloud workstation environment for freelancers, consultants, startups, and the newly and not-so-newly underemployed community of subsurface professionals.

In making this offering, ReSourceYYC has partnered up with a number of software companies: Entero, Seisware, Surfer, ValNav, geoLOGIC, and Divestco, to name a few. The limitations and restrictions around this environment, if any, weren't totally clear. I wondered: Could I add my own tools to this stack, or swap some out? Could I access this environment from anywhere?

It could be awesome. I think it could serve just as many freelancers and consultants as "oil and gas startups". It seems a bit too early to say, but I reckon there are literally thousands of geoscientists and engineers in Calgary who'd be all over this.

I think it's interesting and important and I hope they get it right.

Except where noted, this content is licensed