The Scottish hackathon

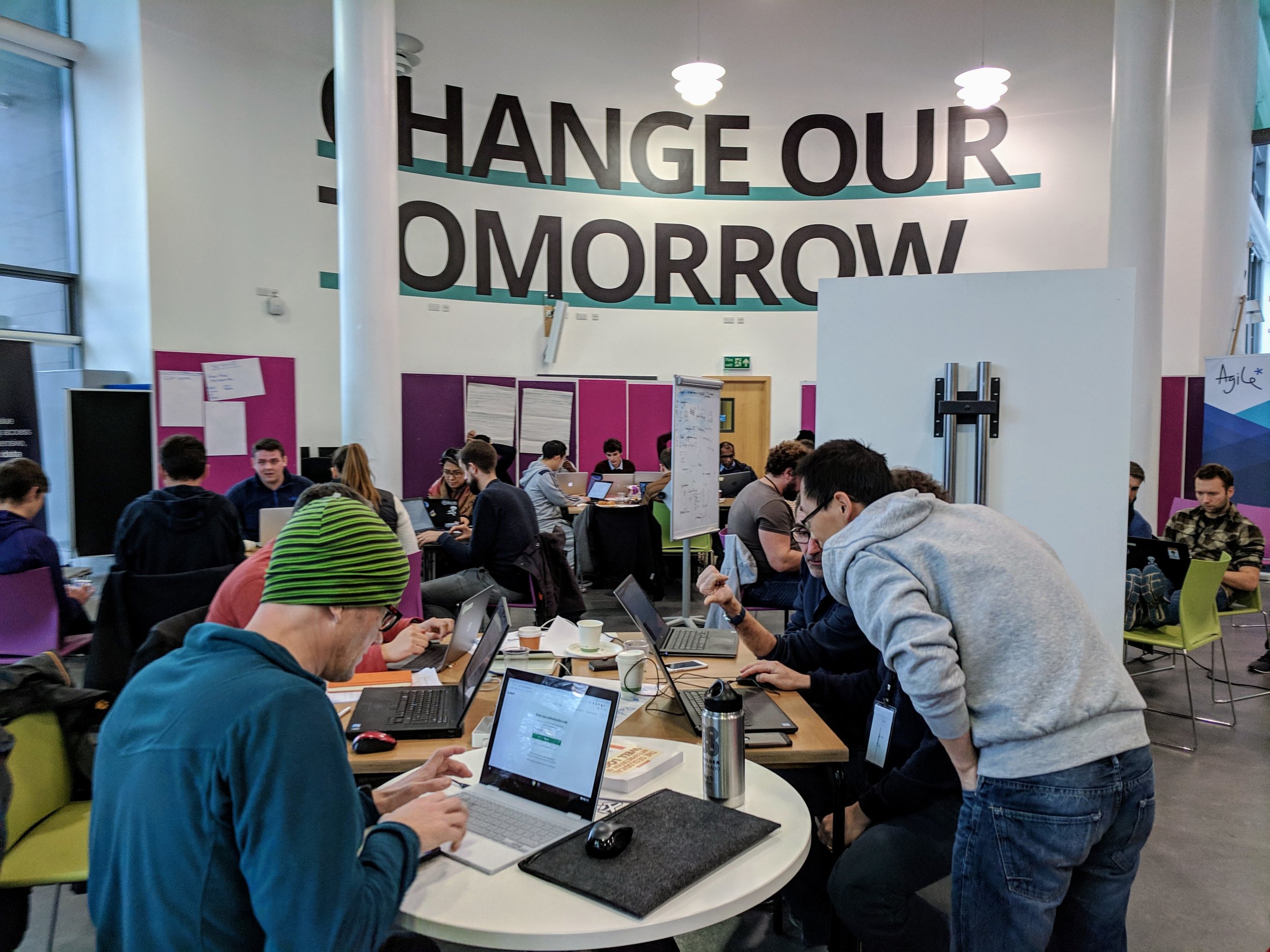

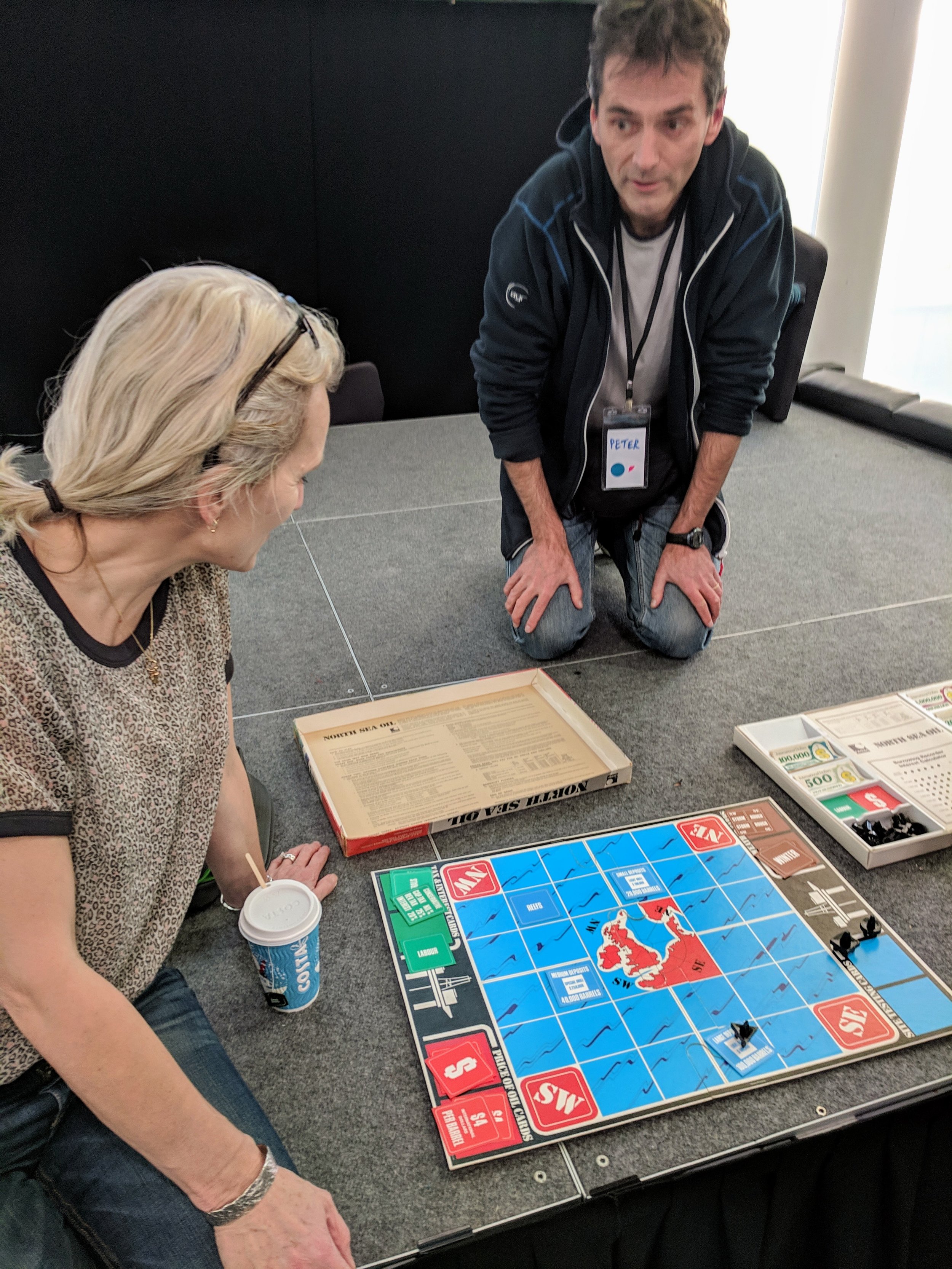

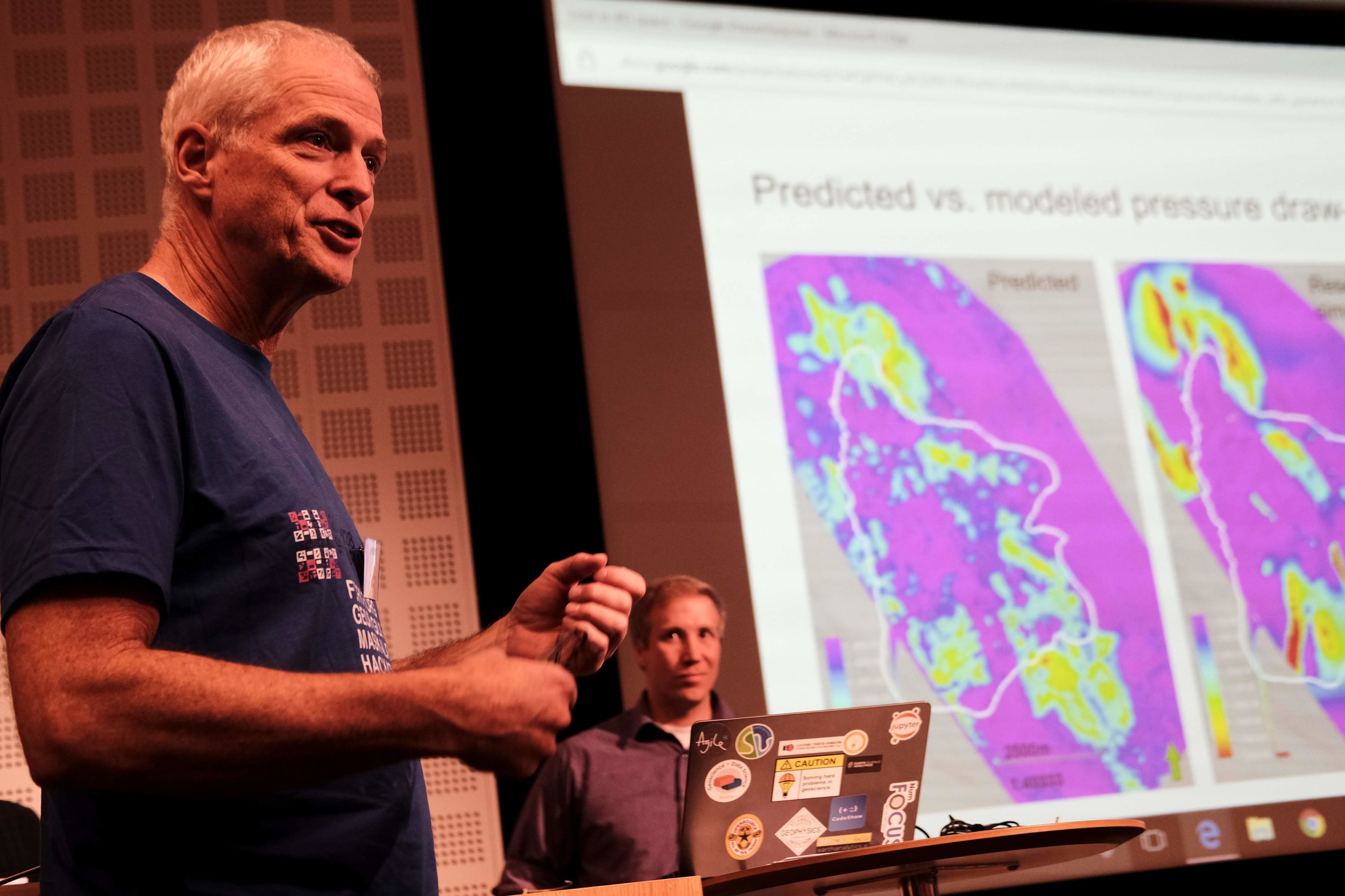

On 16–18 November the UK Oil & Gas Authority (OGA) hosted its first hackathon, with Agile providing the format and technical support. This followed a week of training that the OGA provided, again through Agile, back in September. The theme for the hackathon was ‘machine learning’, and I’m pretty sure it was the first ever geoscience hackathon in the UK.

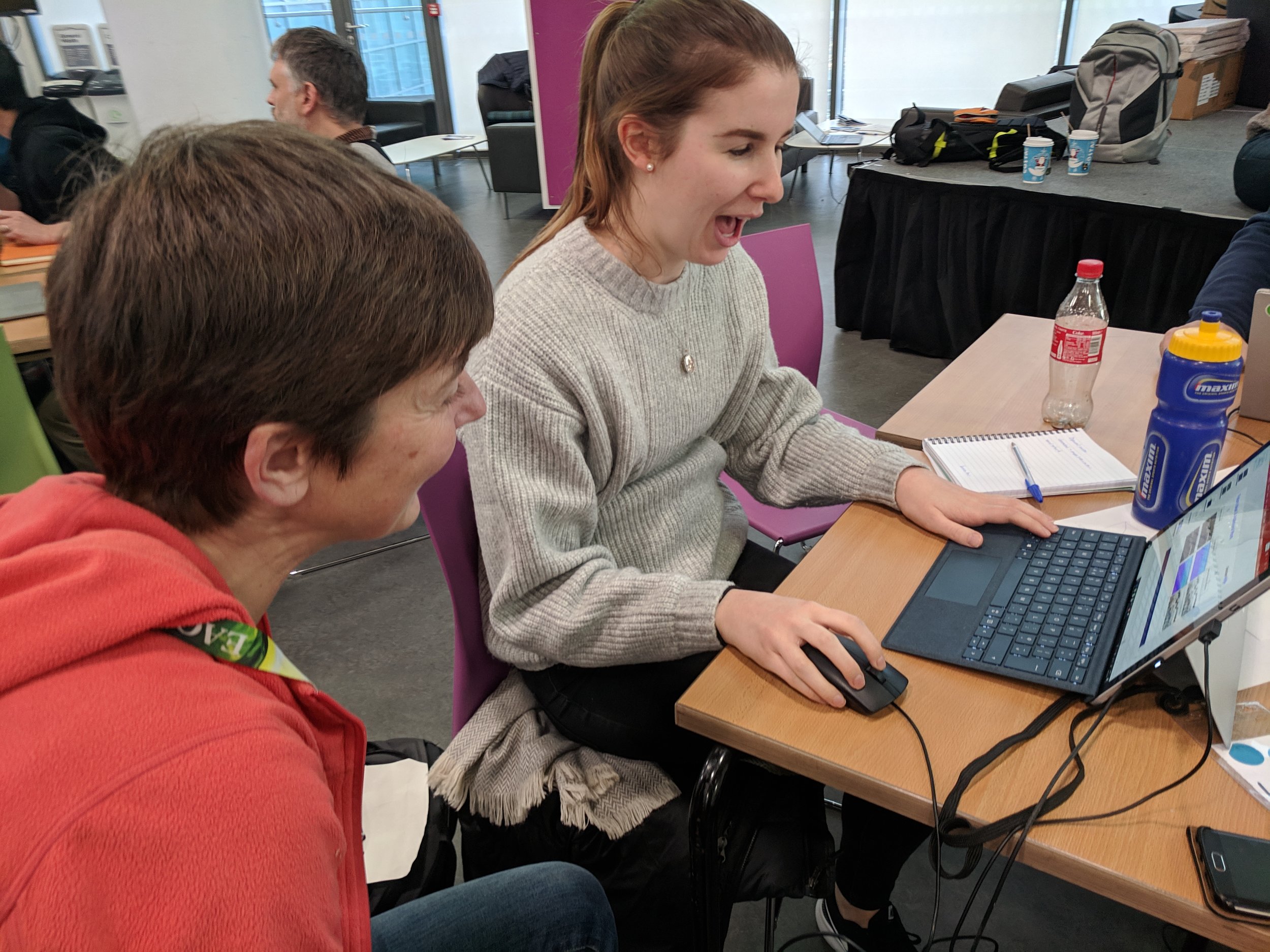

Thirty-seven digital geoscientists participated in the event at Robert Gordon University; most of them appear below. Many of them had not coded at all before the bootcamp on Friday, so a lot of people were well outside their comfort zones when we sat down on Saturday. Kudos to everyone!

The projects included the usual mix of seismic-based tasks, automated well log picking, a bit of natural language processing, some geospatial processing, and seals (of the mammalian variety). Here’s a rundown of what people got up to:

Counting seals on Scottish islands

Seal Team 6: Julien Moreau, James Mullins, Alex Schaaf, Balazs Kertesz, Hassan Tolba, Tom Buckley.

Project: Julien arrived with a cool dataset: over 6000 seals located on two large TIFF images of Linga Holm, an island off Stronsay in the Orkneys. The challenge: locate the seals automatically. The team came up with a pipeline to generate HOG descriptors, train a support vector machine on about 20,000 labelled image tiles, then scan the large TIFFs to try to identify seals. Shown here is the output of one such scan, with a few false positives and false negatives. GitHub repo.

This project won the Most Impact award.
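
For readers wondering what the "scan" step looks like, here is a minimal sketch of a sliding-window pass over an image. It is not the team's code: the function names, the tile size and stride, and the toy image are all made up for illustration, and a simple brightness threshold stands in for the HOG-plus-SVM classifier described above.

```python
def tile_origins(height, width, tile, stride):
    """Yield the (row, col) top-left corner of every tile that fits in the image."""
    for row in range(0, height - tile + 1, stride):
        for col in range(0, width - tile + 1, stride):
            yield row, col


def scan(image, tile, stride, is_seal):
    """Return the origins of all tiles that the classifier flags."""
    height, width = len(image), len(image[0])
    hits = []
    for row, col in tile_origins(height, width, tile, stride):
        # Cut out the tile as a list of row slices.
        patch = [r[col:col + tile] for r in image[row:row + tile]]
        if is_seal(patch):
            hits.append((row, col))
    return hits


def bright_patch(patch):
    """Placeholder classifier (an assumption): flags tiles with mean value > 0.5."""
    values = [v for row in patch for v in row]
    return sum(values) / len(values) > 0.5


# Toy 4 x 4 'image' with one bright 2 x 2 blob in the bottom-right corner.
toy_image = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
hits = scan(toy_image, tile=2, stride=2, is_seal=bright_patch)
```

In a real pipeline, `is_seal` would compute a descriptor for the tile and ask the trained model for a prediction; a stride smaller than the tile size gives overlapping windows, at the cost of more tiles to classify.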

Automatic classification of seismic sections

Team Seis Class: Jo Bagguley, Laura Bardsley, Chio Martinez, Peter Rowbotham, Mike Atkins, Niall Rowantree, James Beckwith.

Project: Can you tell if a section has been spectrally whitened? Or AGC’d? This team set out to teach a neural network the difference. As a first step, they reduced it to a binary classification problem, and showed 110 ‘final’ and 110 ‘raw’ lines from the OGA ESP 2D 2016 dataset to a convolutional neural net. The AI achieved an accuracy of 98% on this task. GitHub repo.

This project won recognition for a Job Well Done.

Why do blocks get relinquished?

Team Relinquishment Surprise: Tanya Knowles, Obiamaka Agbaneje, Kachalla Aliyuda, Daniel Camacho, David Wilkinson (not pictured).

Project: The team recognized the vast trove of latent information locked up in the several thousand reports submitted to the OGA. Despite focusing on relinquishment, they quickly discovered that most of the task is coping with the heterogeneity of the dataset. Still, they managed to extract term frequencies from the various Conclusions sections, and made an ArcGIS web app to map them.
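
As a rough idea of what extracting term frequencies involves, here is a minimal sketch using only the Python standard library. It is not the team's code: the stopword list, the tokenizing rule, and the sample text are invented for illustration, and real report text would need far more cleaning than this.

```python
import re
from collections import Counter

# A tiny, made-up stopword list; a real one would be much longer.
STOPWORDS = {"the", "was", "because", "a", "of", "and", "to", "in"}


def term_frequencies(text, stopwords=STOPWORDS):
    """Count lower-cased alphabetic tokens, skipping stopwords."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return Counter(t for t in tokens if t not in stopwords)


# Invented example text, standing in for a report's Conclusions section.
conclusions = (
    "The block was relinquished because the reservoir was poor. "
    "Reservoir quality was the main risk."
)
tf = term_frequencies(conclusions)
top_terms = tf.most_common(3)
```

Counting per report, rather than over the whole corpus at once, keeps each count tied to a block, which is what makes it possible to put the results on a map.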

Recognizing reflection styles on seismic

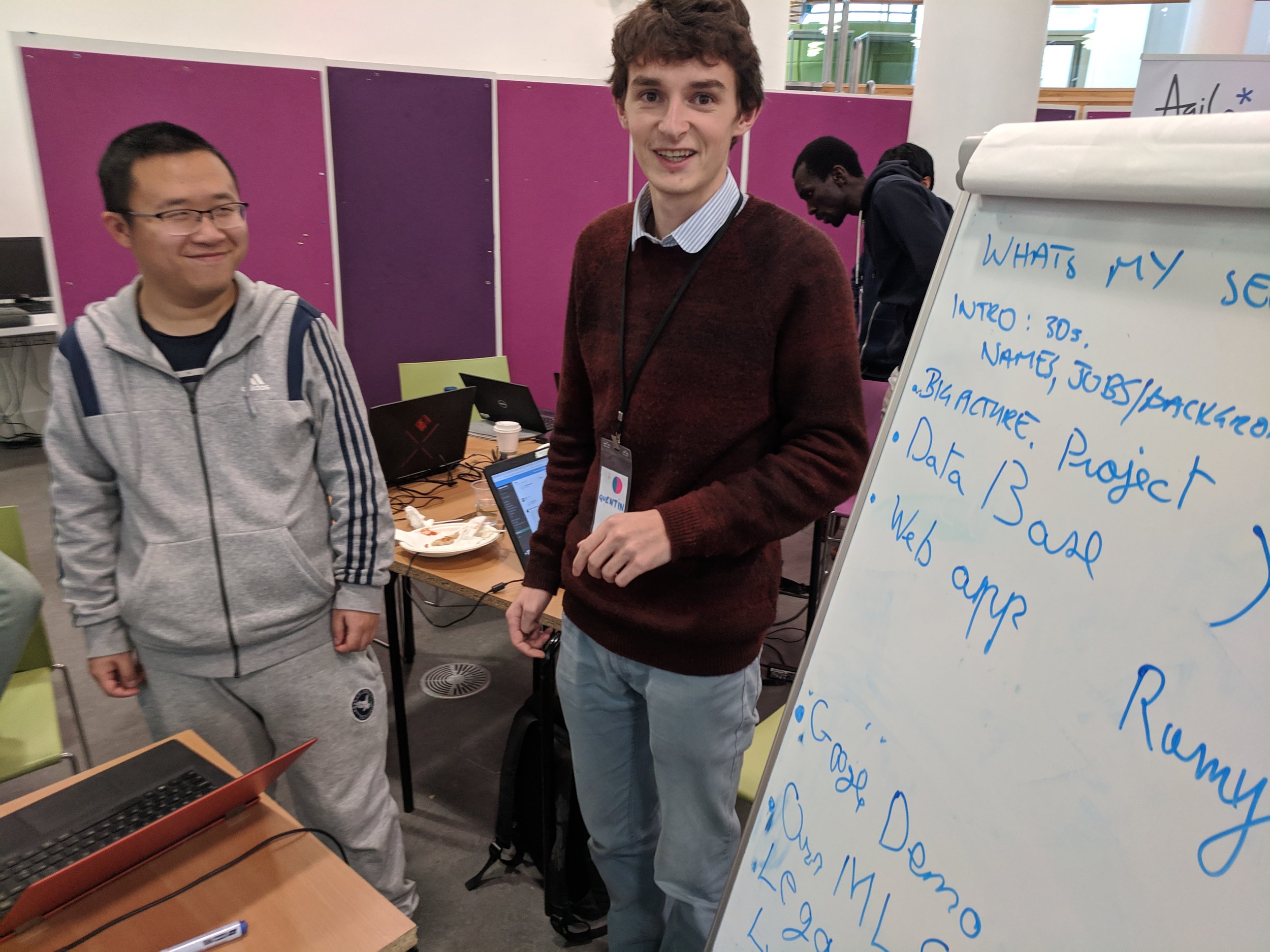

Team What’s My Seismic? Quentin Corlay, Tony Hallam, Ramy Abdallah, Zhihua Cui, Elia Gubbala, Amechi Halim.

Project: The team wanted to detect the presence of various seismic facies in a small segment of seismic data (with a view to later interpreting entire datasets). They quickly generated a training dataset, then explored three classifiers: XGBoost, Google’s AutoML, and a CNN. All of the methods gave reasonable results and were promising enough that the team vowed to continue investigating the problem. Project website. GitHub repo.

This project won the Best Execution award.

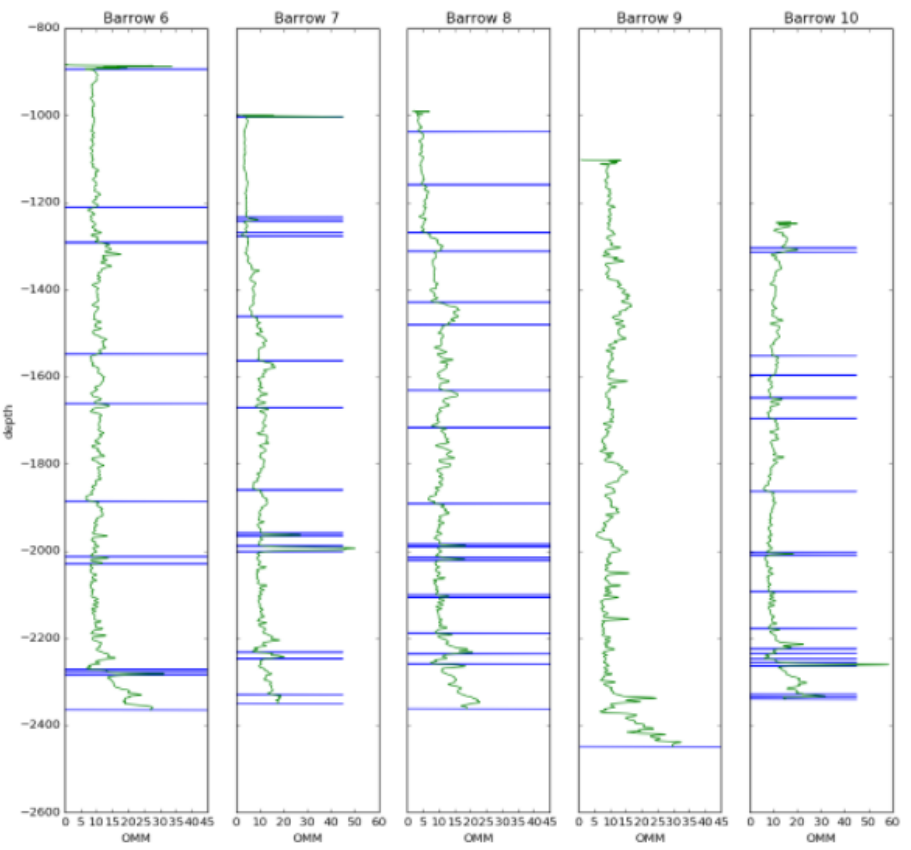

Stretchy-squeezey well log correlation

Team Dynamic Depth Warping: Jacqueline Booth, Sarah Weihmann, Khaled Muhammad, Sadiq Sani, Rahman Mukras, Trent Piaralall, Julio Rodriguez.

Project: Making picks and correlations in wireline data is hard, partly because the stratigraphic signal changes spatially: thinning and thickening, and with missing or extra sections. To try to cope with this, the team applied a dynamic time (well, depth) warping algorithm to the logs, then looked for similar sections in adjacent wells. The image shows a target GR log (left) with the 5 most similar sections. Two, maybe four, of them seem reasonable. Next the team planned to incorporate more logs, and attach probabilities to the correlations. Early results looked promising. GitHub repo.
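
To give a flavour of the idea, here is a minimal sketch of textbook dynamic time warping, plus a brute-force search for the windows of a neighbouring log that best match a target section. It is not the team's implementation: the function names, the fixed window length, the absolute-difference cost, and the toy log values are all assumptions for illustration.

```python
def dtw_distance(a, b):
    """Classic dynamic-programming DTW cost between two 1D sequences."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] is the best cumulative cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: step in a only, step in b only, step in both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def most_similar_sections(target, log, window, top_k=5):
    """Rank every fixed-length window of `log` by DTW distance to `target`."""
    scored = []
    for start in range(len(log) - window + 1):
        section = log[start:start + window]
        scored.append((dtw_distance(target, section), start))
    scored.sort()
    return scored[:top_k]


# Toy example: the neighbouring log contains a 'stretched' copy of the target.
target = [1, 2, 3, 2, 1]
neighbour = [0, 0, 1, 2, 2, 3, 2, 1, 0, 0]
best = most_similar_sections(target, neighbour, window=6, top_k=1)
```

Because the warping lets one sample match several, the stretched section scores a perfect match even though it is one sample longer than the target. This brute-force version is quadratic per window, so it is only suitable for short sections.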

Making lithostrat picks

Team Marker Maker: Nick Hayward, Frédéric Ramon, Can Yang, Peter Crafts, Malcolm Gall.

Project: The team took on the task of sorting out lithostratigraphic well tops in a mature basin. But there are speedbumps on the road to glory, e.g. recognizing which picks are lithological (as opposed to chronological), and which pick names are equivalent. The team spent time on various subproblems, but there’s a long road ahead.

This project won recognition for a Job Well Done.

Alongside these projects, Rob and I floated around trying to help, and James Beckwith hacked on a cool project of his own for a while — Paint By Seismic, a look at unsupervised classification on seismic sections. In between generating attributes and clustering, he somehow managed to help and mentor most of the other teams — thanks James!

Thank you!

Thank you to the OGA for these events, and in particular to Jo Bagguley, whose organizational skills I much appreciated over the last few weeks (as my own skills gradually fell apart). The OGA’s own Nick Richardson, the OGTC’s Gillian White, and Robert Gordon University’s Eyad Elyan acted as judges.

These organizations contributed to the success of these events — please say Thank You to them when you can!

I’ll leave you with some more photos from the event. Enjoy!

Except where noted, this content is licensed
