The open subsurface stack

/Two observations:

Agile has been writing about open source software for geology and geophysics for several years now (for example here in 2011 and here in 2016). Progress is slow. There are lots of useful tools, but lots of gaps too. Some new tools have appeared, others have died. Conclusion: a robust and trusted open stack is not going to magically appear.

People — some of them representing large corporations — are talking more than ever about industry collaboration. Open data platofrms are appearing all over the place. And several times at the DigEx conference in Oslo last week I heard people talk about open source and open APIs. Some organizations, notably Equinor, seem to really mean business. Conclusion: there seems to be a renewed appetite for open source subsurface software.

A quick reminder of what ‘open’ means; paraphrasing The Open Definition and The Open Source Definition in a sentence:

“Open data, content and code can be freely used, modified, and shared by anyone for any purpose.”

The word ‘open’ is being punted around quite a bit recently, but you have to read the small print in our business. Just as OpenWorks is not ‘open’ by the definition above, neither is OpenSpirit (remember that?), nor the Open Earth Community. (I’m not trying to pick on Halliburton but the company does seem drawn to the word, despite clearly not quite understanding it.)

The conditions are perfect

Earlier I said that a robust and trusted ‘stack’ (a collection of software that, ideally, does all the things we need) is not going to magically appear. What do I mean by ‘robust and trusted’? It goes far beyond ‘just code’ — writing code is the easy bit. It means thoroughly tested, carefully documented, supported, and maintained. All that stuff takes work, and work takes people and time. And people and time mean money.

Two more observations:

Agile has been teaching geocomputing like crazy — 377 people in the last year. In our class, the participants install a lot of Python libraries, including a few from the open subsurface stack: segyio, lasio, welly, and bruges. Conclusion: a proto-stack exists already, hundreds of users exist already, and some training and support exist already.

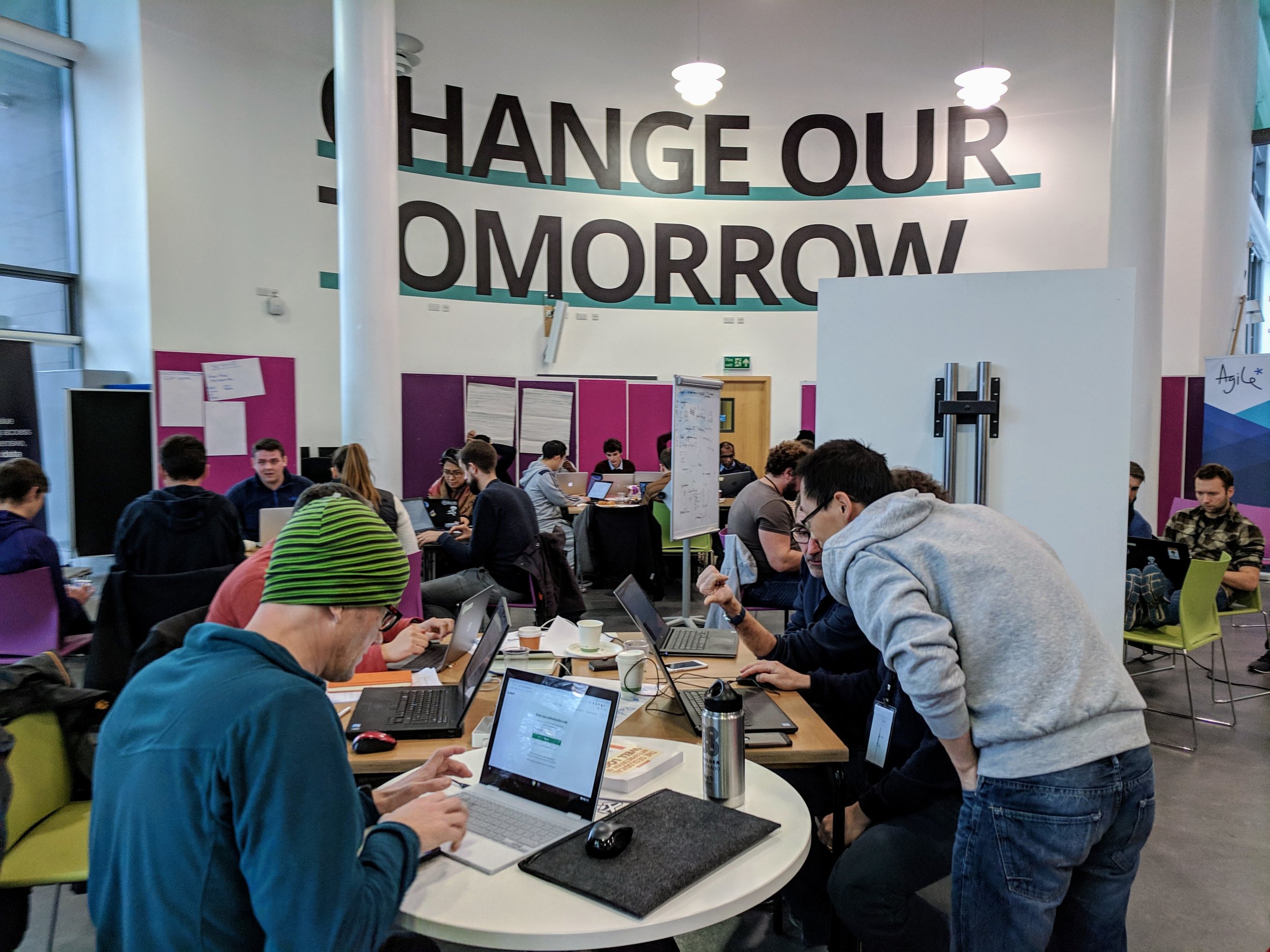

The Software Underground has over 1200 members (you should sign up, it’s free!). That’s a lot of people that care passionately about computers and rocks. The Python and machine learning communies are especially active. Conclusion: we have a community of talented scientists and developers that want to get good science done.

So what’s missing? What’s stopping us from taking open source subsurface tech to the next level?

Nothing!

Nothing is stopping us. And I’ve reached the conclusion that we need to provide care and feeding to this proto-stack, and this needs to start now. This is what the TRANSFORM 2019 unconference is going to be about. About 40 of us (you’re invited!) will spend five days working on some key questions:

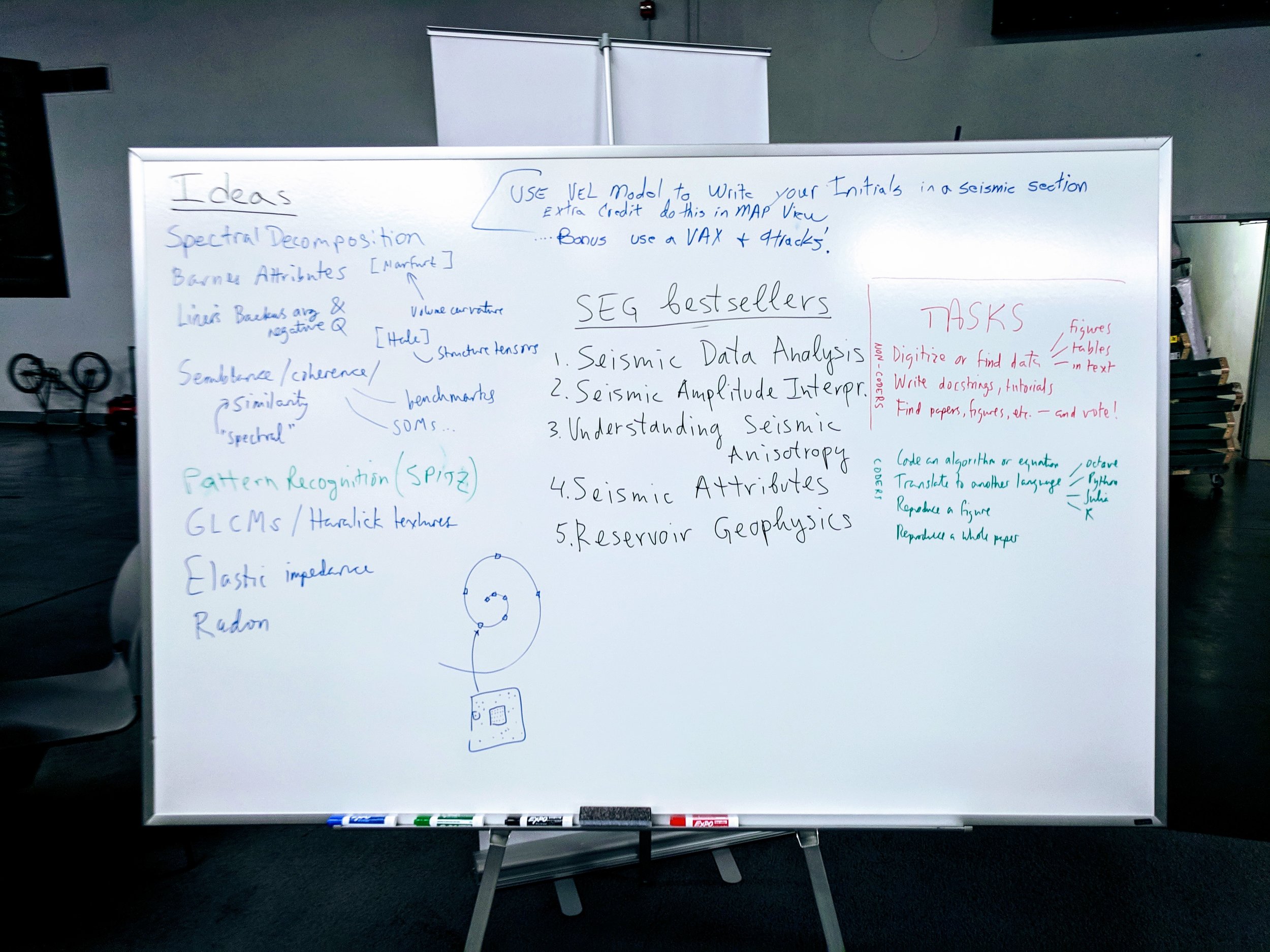

What libraries are in the Python ‘proto-stack’? What kind of licenses do they have? Who are the maintainers?

Do we need a core library for the stack? Something to manage some basic data structures, units of measure, etc.

What are we calling it, who cares about it, and how are we going to work together?

Who has the capacity to provide attention, developer time, existing code, or funds to the stack?

Where are the gaps in the stack, and which ones need to be filled first?

We won’t finish all this at the unconference. But we’ll get started. We’ll produce a lot of ideas, plans, roadmaps, GitHub issues, and new code. If that sounds like fun to you, and you can contribute something to this work — please come. We need you there! Get more info and sign up here.

Read the follow-up post >>> What’s happening at TRANSFORM?

Thumbnail photo of the Old Man of Hoy by Tom Bastin, CC-BY on Flickr.

Except where noted, this content is licensed

Except where noted, this content is licensed